Take a simple sentence: The quick brown fox jumps over the lazy dog. For a human, reading this is second nature. We see words, we understand their meaning, and we grasp the context. But for a computer, this sentence is initially nothing more than a sequence of characters—a string of bytes. Before any artificial intelligence can begin to parse grammar, identify sentiment, or translate text, it must first perform a fundamental, almost brutal act: it must shatter the sentence into pieces. This process, the crucial first step in nearly all Natural Language Processing (NLP), is called tokenization.

And while it might sound as simple as “splitting the sentence by spaces,” this seemingly trivial task is a fascinating rabbit hole of linguistic complexity, revealing just how different human languages can be.

What is Tokenization? More Than Just Splitting Spaces

At its core, tokenization is the process of breaking down a stream of text into smaller, meaningful units called “tokens.” Most often, these tokens are words, but they can also be punctuation marks, numbers, or even parts of words. For our simple sentence, a basic tokenizer might produce this list of tokens:

[ "The", "quick", "brown", "fox", "jumps", "over", "the", "lazy", "dog", "." ]

Notice that the period (.) is its own token. This is important. For a machine to understand that the sentence has ended, it needs to see the period as a distinct entity, not just a bit of ink stuck to the word “dog.” By converting a sentence into a structured list of tokens, we give the AI a foothold. It can now count the words, analyze their frequency, and begin to look for grammatical patterns. This is the foundation upon which search engines, chatbots, and translation services are built.
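Here is a minimal sketch of the basic tokenizer described above, using Python's standard `re` module. The pattern is an illustrative assumption, not a standard. It keeps runs of word characters together and treats every other non-space character as its own token, which is enough to peel the period off “dog.” The `Counter` at the end shows the kind of word counting a token list makes possible.

```python
import re
from collections import Counter

# Assumed pattern: a run of word characters (letters, digits, underscore),
# or any single character that is neither a word character nor whitespace.
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")


def simple_tokenize(text: str) -> list[str]:
    """Split text into word tokens and standalone punctuation tokens."""
    return TOKEN_PATTERN.findall(text)


sentence = "The quick brown fox jumps over the lazy dog."

# Splitting on whitespace alone leaves the period glued to "dog".
print(sentence.split())
# ['The', 'quick', 'brown', 'fox', 'jumps', 'over', 'the', 'lazy', 'dog.']

tokens = simple_tokenize(sentence)
print(tokens)
# ['The', 'quick', 'brown', 'fox', 'jumps', 'over', 'the', 'lazy', 'dog', '.']

# With a token list in hand, counting word frequencies takes one line.
# Lowercasing lets "The" and "the" count as the same word.
freq = Counter(token.lower() for token in tokens)
print(freq["the"])  # 2
```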

But the moment we step away from perfectly clean sentences, the illusion of simplicity evaporates.

The English Puzzle Box: Punctuation, Contractions, and Hyphens

English, with its quirks and exceptions, presents a minefield for tokenization algorithms. What seems obvious to us requires careful, deliberate rules for a machine.

The Case of the Curious Contraction

Consider the word “don’t.” Should it be a single token? Or should it be split into two?

- As one token: ["don't"]. This is simple, but the negation stays locked inside an opaque unit, so nothing connects it to the “not” hidden in other forms like “can’t” or “isn’t.”
- As two tokens: ["do", "n't"]. This is the convention in many NLP tokenizers, including those that follow the Penn Treebank standard. It preserves the root verb “do” and isolates the negation “n’t” as its own token standing for “not.”

Similarly, “I’m” becomes ["I", "'m"] to represent “I am,” and “we’ve” becomes ["we", "'ve"] for “we have.” This seemingly small distinction matters a great deal when an AI needs to understand the grammar and meaning of a sentence. Splitting makes the negation visible as a consistent, separate token, but the system still has to learn that n't signals negation.
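The two-token convention can be approximated with a pair of regular expressions. This is a simplified sketch, loosely modeled on Penn Treebank conventions rather than a faithful reimplementation. The function name `split_contractions` and the exact list of clitics are assumptions for illustration. Curly apostrophes are normalized first, since text copied from the web often uses ’ rather than '.

```python
import re

# Simplified rules, loosely modeled on Penn Treebank conventions (an assumption,
# not a full reimplementation): "n't" is split off the verb, and the clitics
# 'm, 've, 're, 'll, 'd and 's are split off the preceding word.
NEGATION = re.compile(r"\b(\w+)(n't)\b", re.IGNORECASE)
CLITIC = re.compile(r"\b(\w+)('(?:m|ve|re|ll|d|s))\b", re.IGNORECASE)


def split_contractions(text: str) -> list[str]:
    """Whitespace-tokenize text after separating contractions into two tokens."""
    text = text.replace("\u2019", "'")    # normalize curly apostrophes to '
    text = NEGATION.sub(r"\1 \2", text)   # don't -> do n't
    text = CLITIC.sub(r"\1 \2", text)     # I'm -> I 'm, we've -> we 've
    return text.split()


print(split_contractions("I don't think we’ve met"))
# ['I', 'do', "n't", 'think', 'we', "'ve", 'met']
```

Under these rules “can’t” comes out as ["ca", "n't"], which looks odd but keeps the negation token identical across every negated verb.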

The Hyphen’s Headache

Hyphens are another source of ambiguity. How should a tokenizer handle a term like “state-of-the-art”?

- If it’s treated as a single token, ["state-of-the-art"], the machine sees it as one unit, a single compound adjective. This is often the desired outcome.
- If it’s split by the hyphen, ["state", "-", "of", "-", "the", "-", "art"], it becomes four ordinary words and three hyphens, and the fact that they act together as one modifier is lost.

But what about a word like “re-evaluate”? Here, splitting it into its morphemes—the smallest meaningful units—could be beneficial: ["re", "evaluate"]. This helps the AI recognize the prefix “re-” and connect the word to its root, “evaluate.” There is no one-size-fits-all answer; the right choice depends entirely on the final application.
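To make the trade-off concrete, here is a sketch of a tokenizer with a switchable hyphen policy. The function `tokenize_hyphenated`, its policy names, and the tiny `PREFIXES` set are hypothetical, chosen only to reproduce the outcomes discussed above. A real system would need a far larger prefix inventory.

```python
import re

# Hypothetical inventory of detachable prefixes; far from complete.
PREFIXES = {"re", "pre", "co", "non", "anti"}


def tokenize_hyphenated(word: str, policy: str = "keep") -> list[str]:
    """Tokenize a hyphenated word under one of three illustrative policies."""
    if policy == "keep":
        # Treat the whole hyphenated form as one unit.
        return [word]
    if policy == "split":
        # Split at every hyphen; the capturing group keeps each hyphen as a token.
        return re.split(r"(-)", word)
    if policy == "prefix":
        # Detach a known prefix; otherwise leave the word whole.
        head, sep, rest = word.partition("-")
        if sep and head.lower() in PREFIXES:
            return [head, rest]
        return [word]
    raise ValueError(f"unknown policy: {policy!r}")


print(tokenize_hyphenated("state-of-the-art"))           # ['state-of-the-art']
print(tokenize_hyphenated("state-of-the-art", "split"))
# ['state', '-', 'of', '-', 'the', '-', 'art']
print(tokenize_hyphenated("re-evaluate", "prefix"))      # ['re', 'evaluate']
```

Note that the "prefix" policy leaves “state-of-the-art” whole, because “state” is not in the prefix list. That is the kind of application-specific rule the choice comes down to.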

Beyond the Latin Alphabet: When Spaces Disappear

If English is a puzzle box, some other languages are escape rooms. Many writing systems around the world do not use spaces to separate words, forcing tokenization to become a much more sophisticated act of linguistic detective work.

Consider Chinese. The sentence “我爱北京天安门” (Wǒ ài Běijīng Tiān’ānmén) means “I love Tiananmen in Beijing.” To an English speaker, it looks like a solid block of characters. There are no spaces to guide us. A simple space-based tokenizer would fail completely. Instead, tokenizers for Chinese must use complex algorithms, often backed by enormous dictionaries and machine learning models, to identify word boundaries. The sentence must be correctly segmented like this:

[ "我", "爱", "北京", "天安门" ] (I, love, Beijing, Tiananmen)

Getting this wrong can drastically alter the meaning. Japanese presents a similar challenge, as it mixes three different scripts (Kanji, Hiragana, and Katakana) with no spaces. Thai, Lao, Khmer, and Tibetan are other prominent examples of languages where word segmentation is a major hurdle that must be cleared before any other processing can occur.
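One classic dictionary-based strategy is forward maximum matching. The tokenizer scans left to right and, at each position, takes the longest dictionary word that starts there. The sketch below uses a tiny hand-made dictionary, which is an assumption for illustration. Production segmenters rely on large lexicons plus statistical or neural models, partly because greedy matching breaks on ambiguous strings.

```python
def max_match(text: str, dictionary: set[str]) -> list[str]:
    """Forward maximum matching: at each position take the longest dictionary
    word starting there, falling back to a single character if none matches."""
    max_len = max((len(word) for word in dictionary), default=1)
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking down to one character.
        for length in range(min(max_len, len(text) - i), 0, -1):
            candidate = text[i:i + length]
            if length == 1 or candidate in dictionary:
                tokens.append(candidate)
                i += length
                break
    return tokens


# Toy dictionary for illustration only.
toy_dict = {"我", "爱", "北京", "天安门", "研究", "研究生", "生命", "起源"}

print(max_match("我爱北京天安门", toy_dict))
# ['我', '爱', '北京', '天安门']
print(max_match("研究生命起源", toy_dict))
# ['研究生', '命', '起源']
```

The second call shows the classic failure. The intended reading of 研究生命起源 is 研究 / 生命 / 起源 (“study the origin of life”), but the greedy matcher grabs the longer dictionary word 研究生 (“graduate student”) first and is left with a stray 命.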

The Word-Sentences: The Challenge of Agglutination

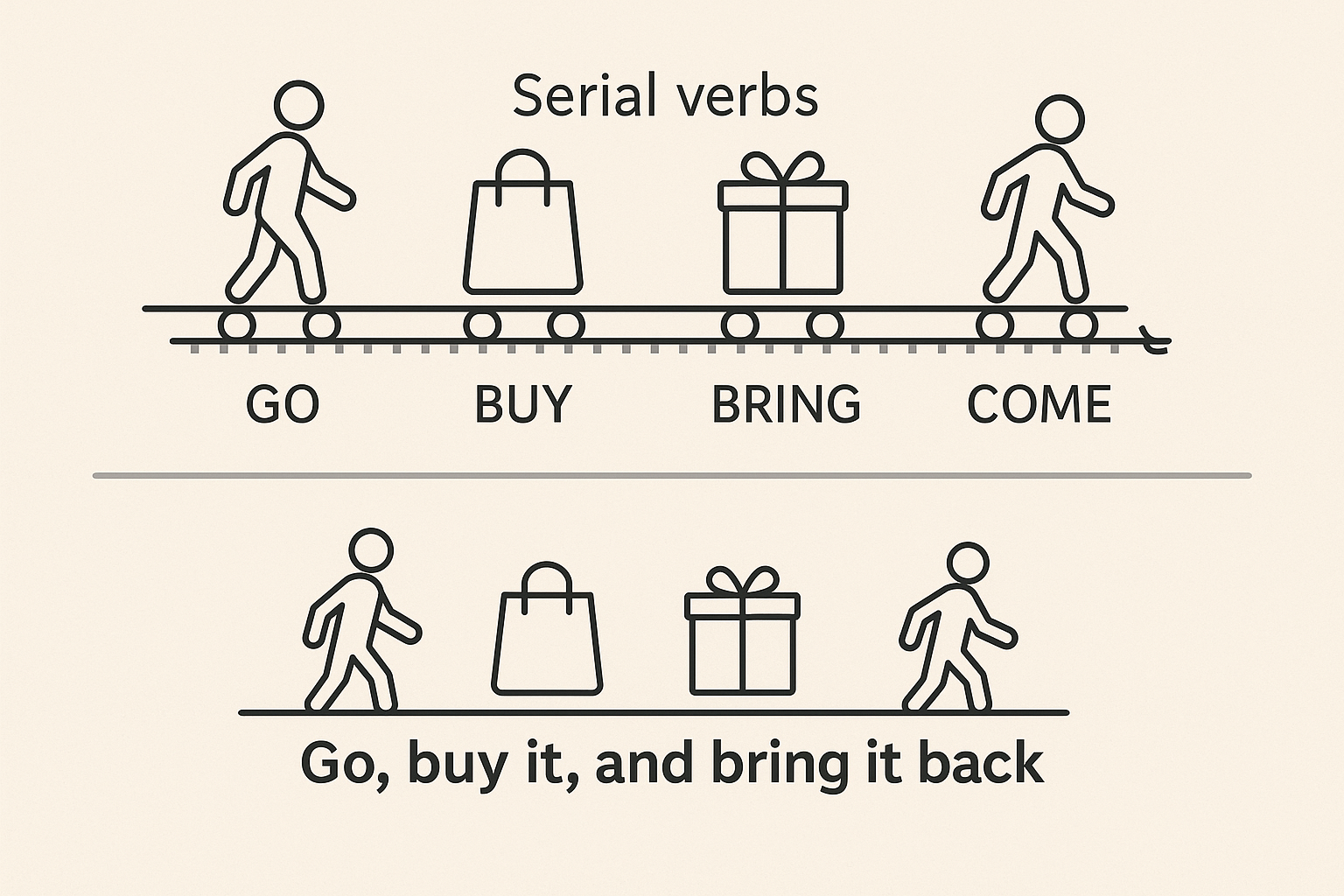

Then there are agglutinative languages, where the very concept of a “word” becomes wonderfully fluid. In languages like Turkish, Finnish, and Hungarian, it’s common to string multiple morphemes together into a single, incredibly long word that can translate to an entire sentence in English. German is not agglutinative in the strict sense, but its habit of compounding nouns produces similarly long words and poses a similar challenge.

German is famous for its compound nouns. A word like Donaudampfschifffahrtsgesellschaftskapitän is a single, valid word meaning “Danube steamship company captain.” To understand its meaning, a tokenizer might need to break it down into its constituent parts: Donau (Danube), Dampf (steam), Schiff (ship), Fahrt (voyage), Gesellschaft (company), and Kapitän (captain), with a linking -s- joining some of them.
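Splitting a German compound can be sketched as a recursive search over a lexicon. The search finds a known word at the start, then tries to split the remainder, optionally skipping a linking -s- (the so-called Fugen-s). The lexicon, the linker list, and the function `split_compound` are illustrative assumptions. Real decompounders use large lexicons and frequency statistics to choose among competing splits.

```python
from typing import Optional


def split_compound(word: str, lexicon: set[str],
                   linkers: tuple[str, ...] = ("s",)) -> Optional[list[str]]:
    """Recursively split a compound into lexicon words, preferring the longest
    head and allowing a linking element (such as German -s-) between parts.
    Returns the list of parts, or None if no complete split exists."""
    word = word.lower()
    if word in lexicon:
        return [word]
    for end in range(len(word) - 1, 0, -1):  # longest head first
        head, rest = word[:end], word[end:]
        if head not in lexicon:
            continue
        # Try the remainder as is, then with a leading linking element removed.
        candidates = [rest] + [rest[len(link):] for link in linkers if rest.startswith(link)]
        for remainder in candidates:
            tail = split_compound(remainder, lexicon, linkers)
            if tail:
                return [head] + tail
    return None


# Toy lexicon for illustration only.
toy_lexicon = {"donau", "dampf", "schiff", "fahrt", "gesellschaft", "kapitän"}

print(split_compound("Donaudampfschifffahrtsgesellschaftskapitän", toy_lexicon))
# ['donau', 'dampf', 'schiff', 'fahrt', 'gesellschaft', 'kapitän']
```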

Turkish takes this to another level. The word-sentence Çekoslovakyalılaştıramadıklarımızdan mısınız? translates to “Are you one of those people whom we could not make into Czechoslovakians?” Its first word contains a root (“Çekoslovakya”) followed by a chain of suffixes that add concepts such as “belonging to,” “become,” “make” (causative), “unable to,” a past participle, plural, “our,” and “from among,” while the separately written question particle mısınız (“are you?”) turns the whole thing into a question. For an AI to process Turkish, word-level tokenization is not enough; it needs sub-word tokenization to unpack the rich grammatical information encoded within each word.
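Sub-word tokenizers break words into pieces drawn from a fixed vocabulary. WordPiece, for example, segments each word with a greedy longest-match-first search. The sketch below imitates that search in simplified form, with no continuation markers and a single-character fallback instead of an unknown token. The vocabulary is hand-picked to mirror the Turkish morphemes and is purely an assumption for illustration. Vocabularies learned from data by methods like BPE or WordPiece rarely line up this neatly with morpheme boundaries.

```python
def greedy_subword(word: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match-first segmentation against a fixed vocabulary.
    Characters not covered by any vocabulary piece become single-character pieces."""
    # Note: str.lower() is not Turkish-aware ("I" becomes "i", not "ı");
    # the example word contains no capital I, so that does not matter here.
    word = word.lower()
    pieces = []
    i = 0
    while i < len(word):
        # Try the longest remaining substring first, shrinking toward one character.
        for end in range(len(word), i, -1):
            piece = word[i:end]
            if piece in vocab or end == i + 1:
                pieces.append(piece)
                i = end
                break
    return pieces


# Hand-picked vocabulary that mirrors the morphemes (an assumption for illustration).
toy_vocab = {"çekoslovakya", "lı", "laş", "tır", "ama", "dık", "lar", "ımız", "dan"}

print(greedy_subword("Çekoslovakyalılaştıramadıklarımızdan", toy_vocab))
# ['çekoslovakya', 'lı', 'laş', 'tır', 'ama', 'dık', 'lar', 'ımız', 'dan']
```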

The Invisible Labor Powering Our Digital World

Every time you type a query into a search engine, ask a chatbot a question, or use a translation app, this intricate process of tokenization happens in the background, invisibly and in milliseconds. It is the silent, foundational labor that makes our digital communication possible. It’s a field where computer science meets deep linguistics, where programmers must become amateur linguists, and linguists provide the critical insights that make the code work.

So the next time you interact with an AI, remember that before it could even begin to “think,” it first had to learn the ancient art of shattering sentences. It had to learn that what separates words isn’t always a space, that a single word can be a sentence, and that the humble apostrophe holds the power of negation. In the world of AI, understanding begins with breaking things apart.