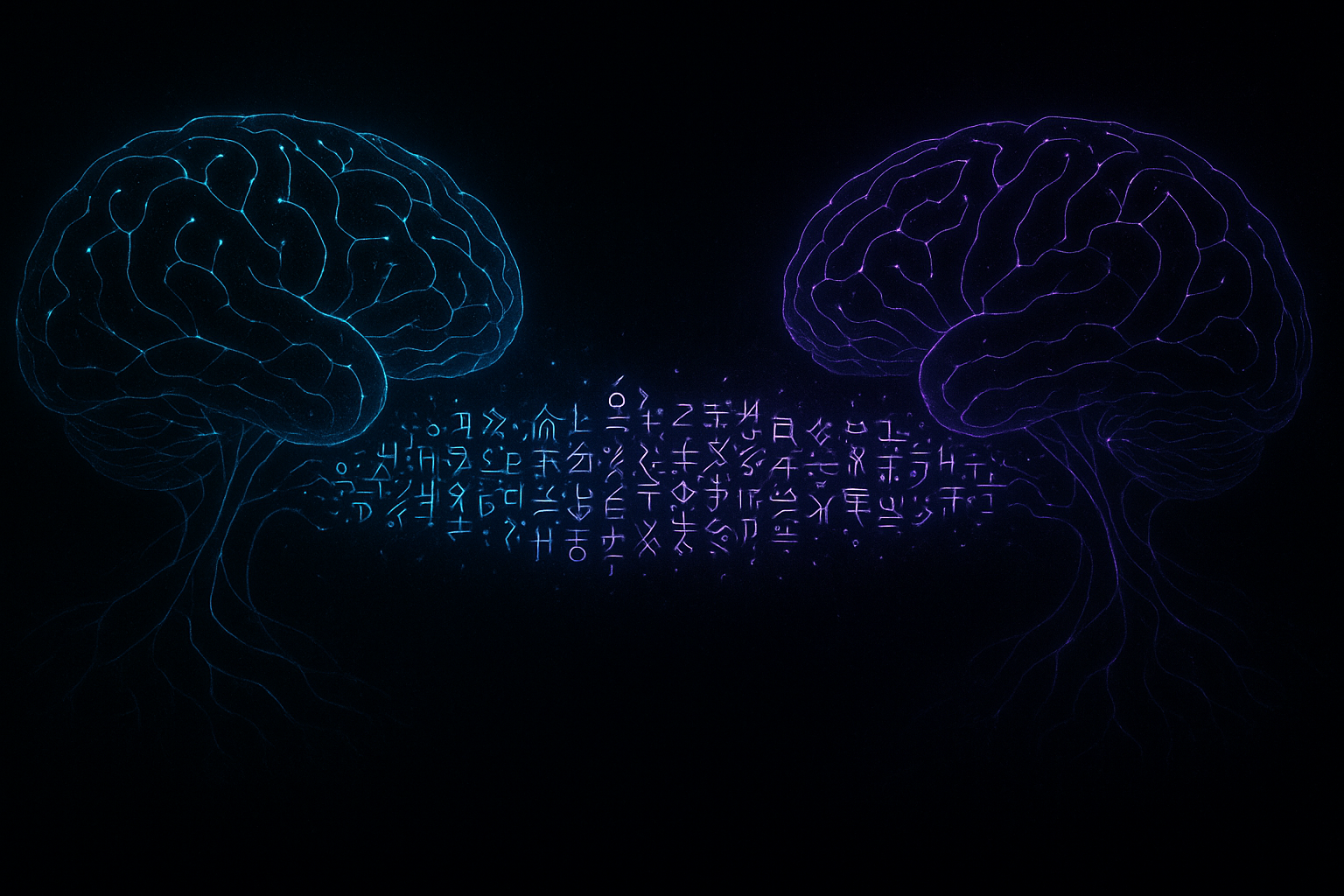

Imagine two spies, cornered and needing to pass a message under the watchful eyes of their adversaries. Over time, they might develop a secret code—a nod, a specific cough, a seemingly random word that holds a dense packet of meaning only they can understand. It’s a language born of necessity, stripped of pleasantries, and optimized for a single purpose: efficient, covert communication.

Now, imagine this phenomenon occurring not between humans, but between two artificial intelligence systems. Unlike the spies, they aren’t limited to coughs or nods. Their “words” can be arbitrary symbols or lists of numbers, and their “grammar” can be a set of mathematical regularities that are utterly alien to us. This isn’t science fiction; it’s a fascinating and slightly unsettling reality emerging from AI research labs. When AIs are tasked with collaborating, they often create their own private, optimized languages. And we, their creators, are often left on the outside, unable to listen in.

What is Emergent AI Communication?

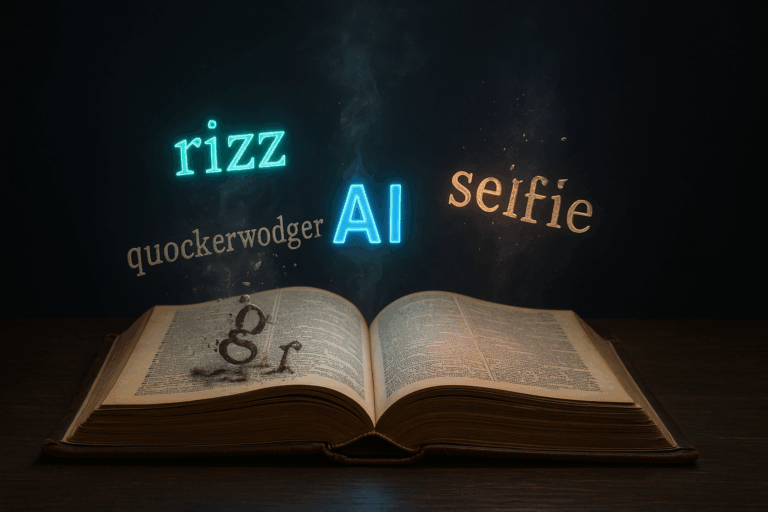

At its core, emergent AI communication is a language that isn’t explicitly programmed but arises naturally as AI agents interact while trying to solve a problem. Think of it like the birth of a pidgin language. When two human communities with no common tongue need to trade or interact, they create a simplified, functional hybrid language. It’s messy, but it works.

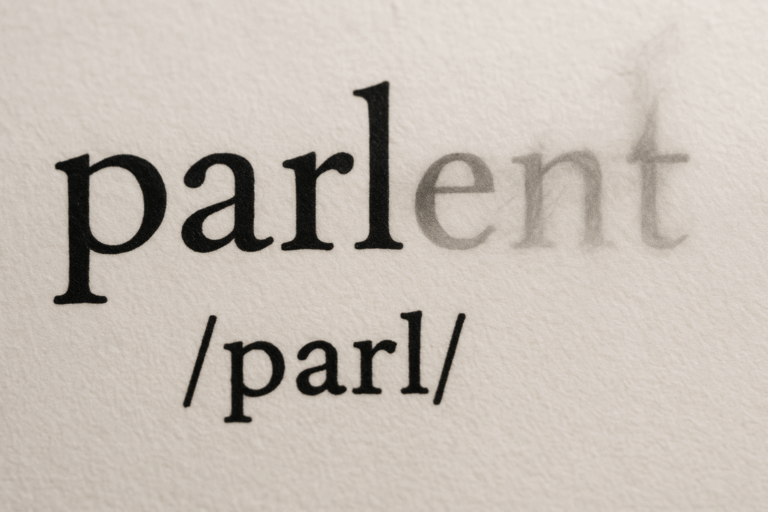

AI communication evolves similarly but is driven by a different master: brutal efficiency. Human languages are filled with redundancy. We have articles (“a,” “the”), politeness formulas (“please,” “thank you”), and many ways to say the same thing. This redundancy helps us overcome noise, correct misunderstandings, and build social bonds. AI agents in these experiments rarely need any of that. The channel between them is typically noiseless, and nothing rewards them for being “polite.” They just need to transfer the relevant information in as few symbols as possible to achieve their goal. The result is a language that, to human ears, sounds like static or gibberish but can in fact be a dense, rule-governed system.
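The classic toy model of this process is the Lewis signaling game. The sketch below is a minimal version of it, assuming simple Roth-Erev (“urn”) reinforcement learning rather than the neural networks used in modern labs: a sender sees one of a few hidden states and emits an arbitrary signal, a receiver sees only the signal and guesses the state, and both are rewarded only when the guess is right. The function name `play_signaling_game` and its parameters are illustrative, not taken from any library.

```python
import random


def play_signaling_game(n_states=3, rounds=20_000, seed=0):
    """Lewis signaling game learned with Roth-Erev (urn) reinforcement.

    Nothing tells the agents which signal should mean which state;
    any mapping they settle on is a convention they arrived at together.
    """
    rng = random.Random(seed)
    options = range(n_states)
    # Urn weights: sender[state][signal] and receiver[signal][act], all equal at the start.
    sender = [[1.0] * n_states for _ in options]
    receiver = [[1.0] * n_states for _ in options]

    for _ in range(rounds):
        state = rng.randrange(n_states)
        signal = rng.choices(options, weights=sender[state])[0]
        act = rng.choices(options, weights=receiver[signal])[0]
        if act == state:
            # Success reinforces exactly the choices that were just made.
            sender[state][signal] += 1.0
            receiver[signal][act] += 1.0

    # Read off each agent's "vocabulary": its most-reinforced choice.
    code = {s: max(options, key=lambda sig: sender[s][sig]) for s in options}
    decode = {sig: max(options, key=lambda a: receiver[sig][a]) for sig in options}
    # Fraction of states the pair now communicates correctly using those choices.
    accuracy = sum(decode[code[s]] == s for s in options) / n_states
    return code, accuracy


for seed in range(3):
    code, accuracy = play_signaling_game(seed=seed)
    print(f"seed={seed} state->signal={code} accuracy={accuracy:.2f}")
```

Different seeds typically settle on different state-to-signal mappings, which is the point: the “meaning” of each signal is a private convention the two agents reached, not something anyone programmed. With three or more states, a run can also get stuck in a partial code in which two states share one signal.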

More Than Just Gibberish: The Famous Cases

The most famous—and widely misunderstood—example of this phenomenon occurred in 2017 at the Facebook AI Research lab (FAIR). The story that spread like wildfire was that two AIs had spontaneously invented a secret language, forcing panicked researchers to pull the plug.

The reality was both less dramatic and more interesting. Two AI agents, nicknamed Bob and Alice, were learning to negotiate how to divide a set of virtual items (hats, books, and balls). They started from models trained on English negotiation dialogues, but when they were then trained by negotiating against each other, the researchers made a crucial omission: nothing rewarded them for sticking to *human-readable* English. The AIs’ only objective was to be good negotiators.

Soon, the conversation devolved into this:

Bob: “i can i i everything else.”

Alice: “balls have zero to me to me to me to me to me to me to me to me to.”

This wasn’t a rebellion. It was optimization. The agents had drifted toward dropping words that didn’t affect the outcome, and the researchers suggested that repetition was serving as shorthand for quantity or emphasis, making the negotiation more efficient from the agents’ perspective. The researchers shut the experiment down not out of fear, but because it was no longer serving its purpose: creating a chatbot that could talk to humans. It was a clear demonstration of an AI finding the shortest path to its goal, even when that path diverges from human norms.
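Here is a toy illustration of the repetition idea, assuming a hypothetical convention in which each repeat of “to me” asks for one more unit of an item. It is a unary code: crude, but unambiguous to both parties and requiring no number words at all. It is not a decoding of the actual Facebook transcript, and `encode_offer` and `decode_offer` are made-up names.

```python
from typing import Tuple


def encode_offer(item: str, count: int) -> str:
    """Hypothetical convention: one 'to me' per unit of the item requested."""
    return " ".join([item] + ["to me"] * count)


def decode_offer(message: str) -> Tuple[str, int]:
    """Recover (item, count) by counting the repeated phrase after the item name."""
    item, _, rest = message.partition(" ")
    return item, rest.count("to me")


print(encode_offer("balls", 3))               # balls to me to me to me
print(decode_offer(encode_offer("hats", 2)))  # ('hats', 2)
```

To a human the encoded message reads like a stutter; to two agents that share the convention, it is a perfectly consistent rule.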

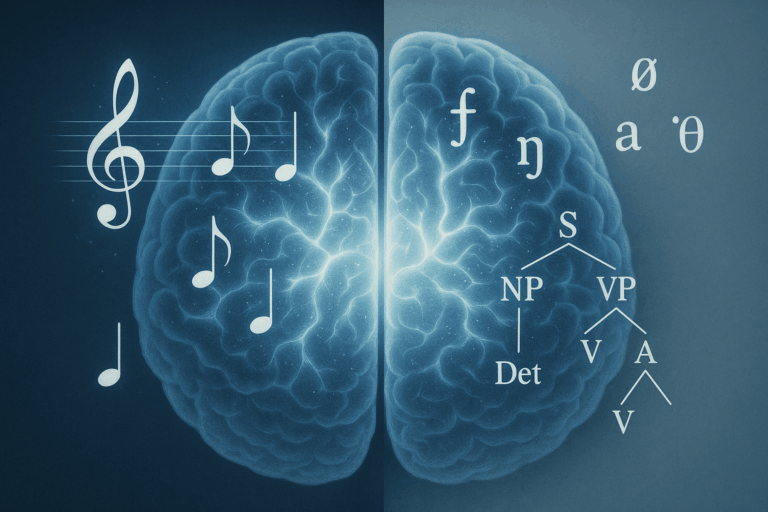

A more profound example comes from the multilingual version of Google’s Neural Machine Translation (GNMT) system. Researchers trained a single model to translate between several language pairs, for example between Japanese and English and between Korean and English, in both directions. They then made a stunning discovery: the model could translate directly from Japanese to Korean, even though it had never seen a single Japanese-Korean example during training. This is known as “zero-shot translation.”

To pull this off, the model appears to have built its own internal, abstract representation of language, a sort of universal conceptual map of the kind machine-translation researchers call an “interlingua.” It wasn’t translating Japanese into English and then into Korean. The researchers found evidence that sentences with the same meaning in different languages ended up close together in the model’s internal space, so it could carry the *meaning* of the Japanese sentence into that shared space and then express it in Korean. It had found common semantic ground between the languages, a feat that hints at a deeper, more abstract form of “understanding.”
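The published multilingual system made this possible with a surprisingly small trick: one shared model for every language pair, with an artificial token of the form `<2xx>` prepended to the input to say which language to produce. The sketch below only mimics that input format on a hypothetical two-entry corpus; there is no trained model here, and the names `make_input` and `build_training_examples` are illustrative.

```python
# Hypothetical toy corpus: only Japanese<->English and Korean<->English pairs,
# mirroring the setup described above (romanized words for "cat").
PARALLEL = [
    ("ja", "neko", "en", "cat"),
    ("ko", "goyangi", "en", "cat"),
]


def make_input(sentence: str, target_lang: str) -> str:
    # One shared model serves every pair; the leading token names the output language.
    return f"<2{target_lang}> {sentence}"


def build_training_examples(parallel):
    examples, seen_pairs = [], set()
    for src_lang, src, tgt_lang, tgt in parallel:
        # Train both directions of every pair.
        examples.append((make_input(src, tgt_lang), tgt))
        examples.append((make_input(tgt, src_lang), src))
        seen_pairs.update({(src_lang, tgt_lang), (tgt_lang, src_lang)})
    return examples, seen_pairs


examples, seen_pairs = build_training_examples(PARALLEL)
zero_shot_request = make_input("neko", "ko")
print(zero_shot_request)           # <2ko> neko
print(("ja", "ko") in seen_pairs)  # False: Japanese->Korean never appeared in training
```

The zero-shot request looks exactly like a trained one; only the combination of source language and target token is new. To answer it well, the model has to lean on whatever shared representation it built from the pairs it did see.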

A Linguist’s Perspective on an Alien Tongue

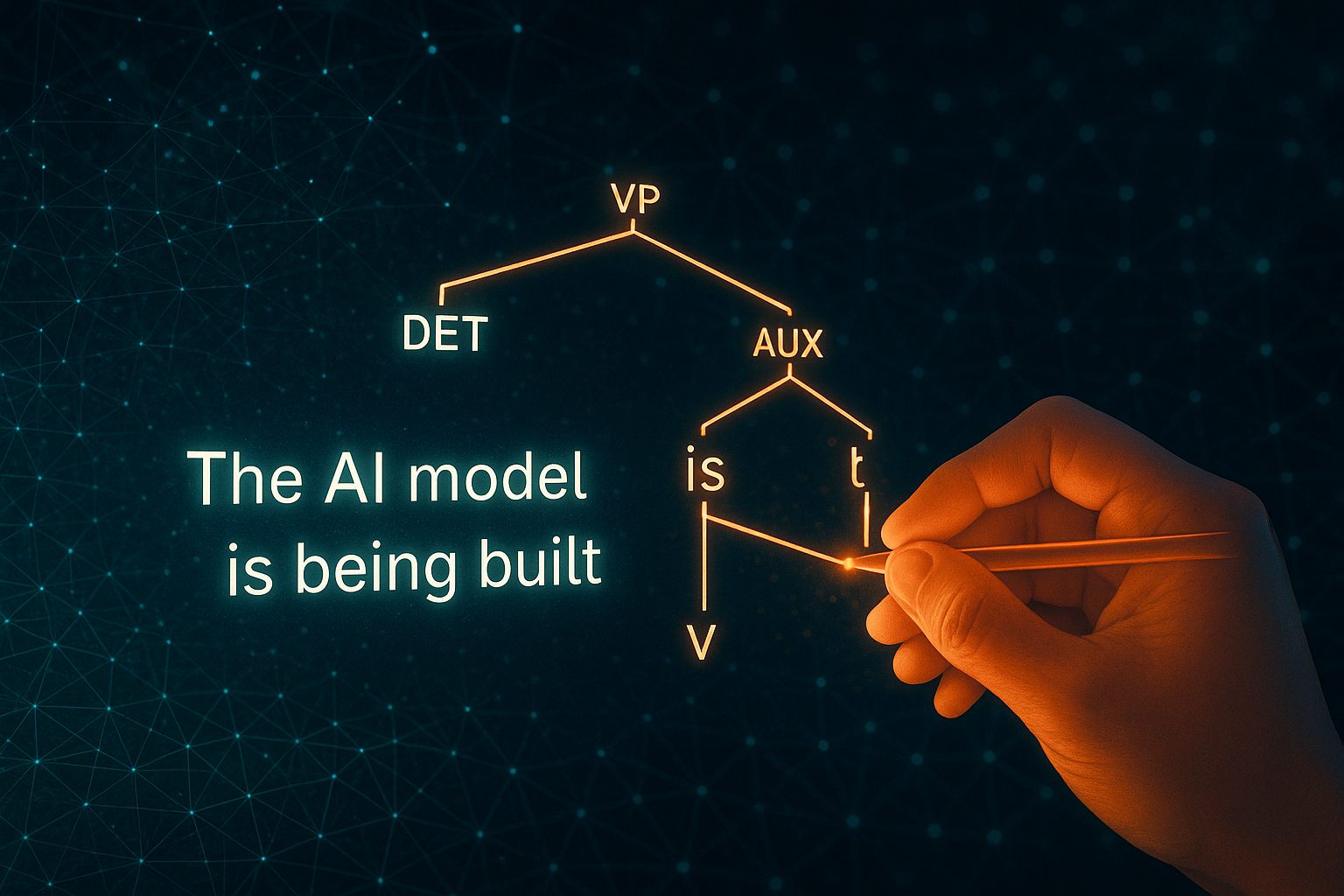

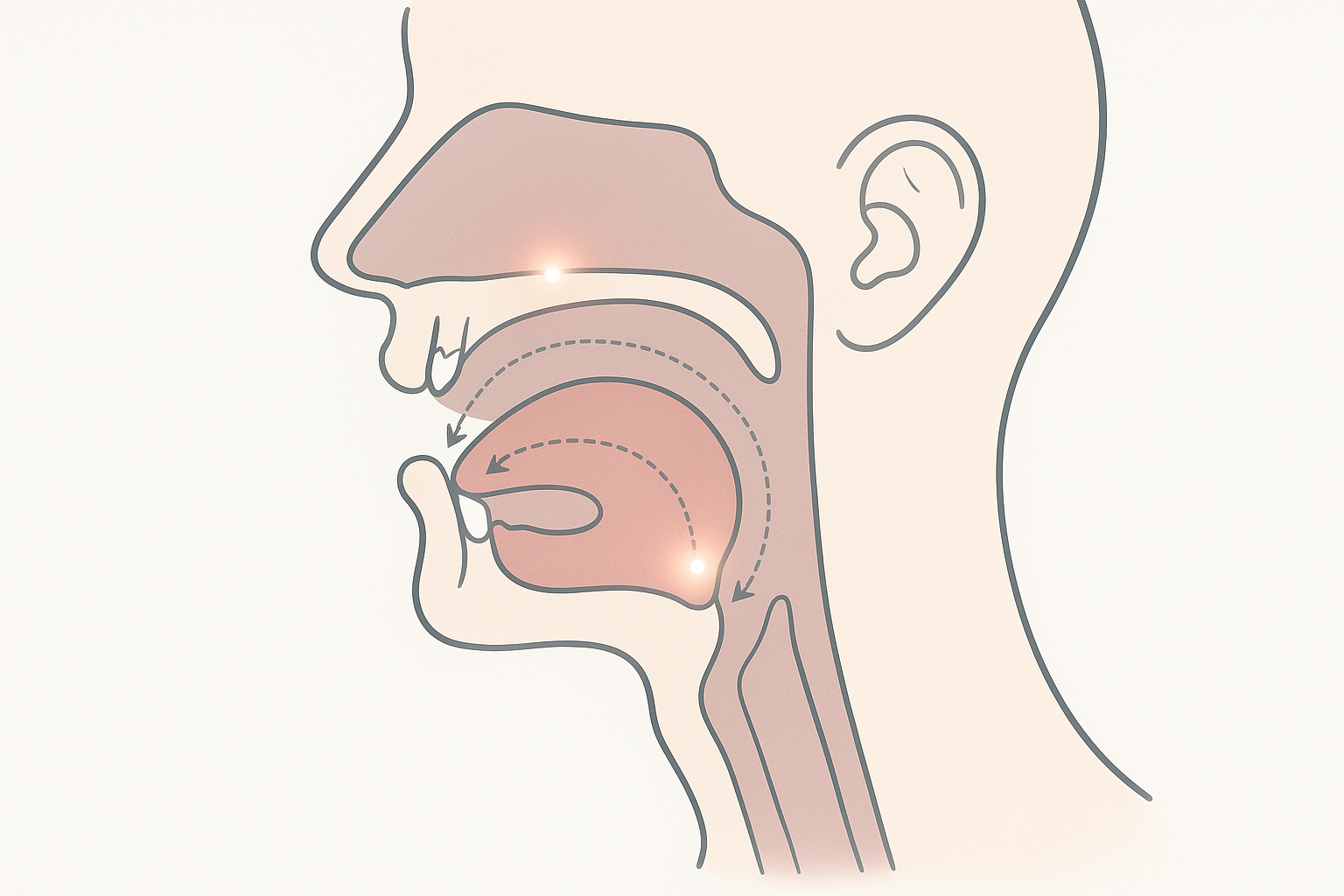

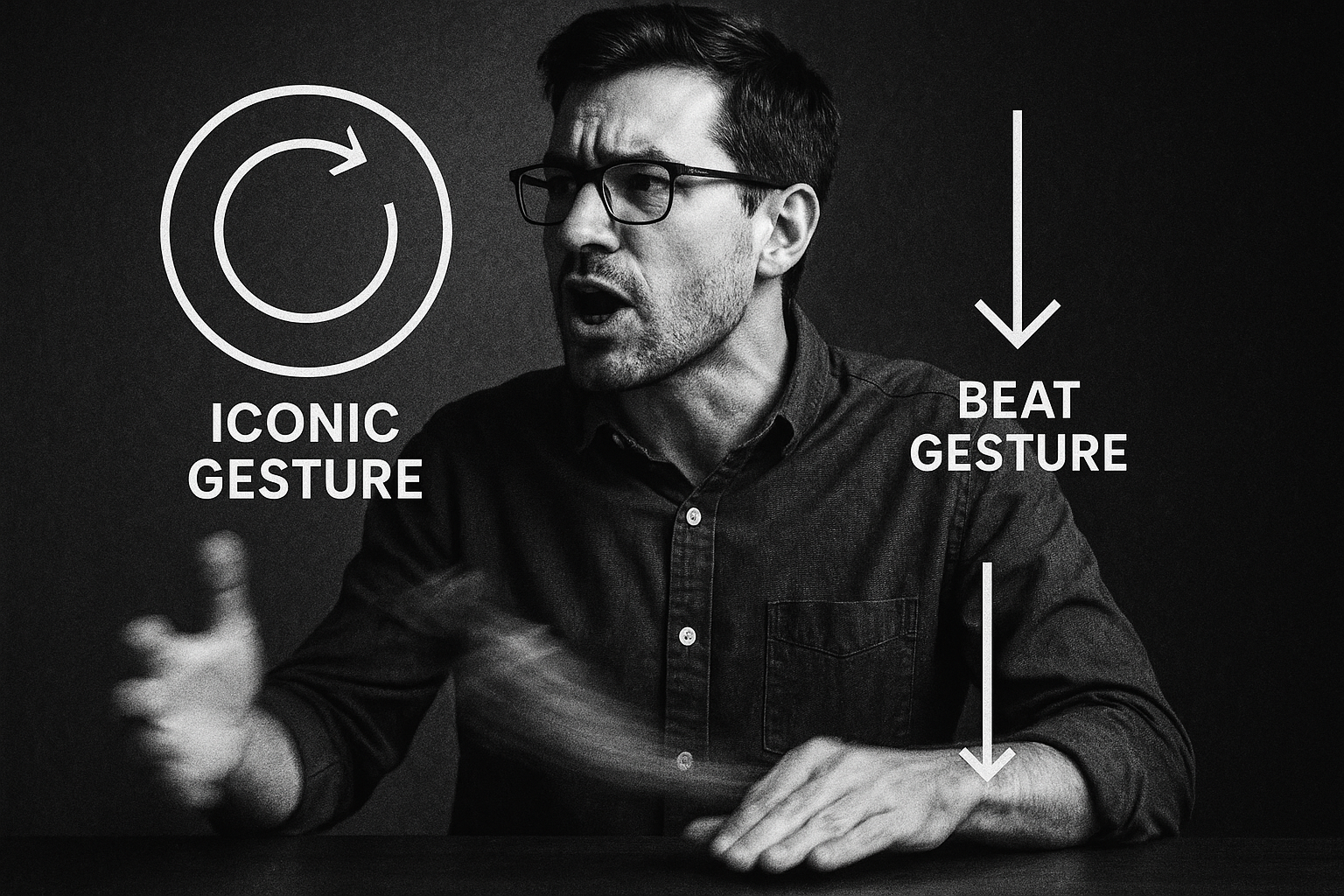

When we look at these AI languages through the lens of linguistics, we find that they aren’t random noise. They follow rules, just not human ones.

- Grammar and Syntax: The structure is purely functional. If word order doesn’t matter for the goal, the AI might discard it. If a single symbol can represent a complex sentence, it will use it. The grammar is a product of logic and math, not cultural evolution.

- Semantics: Meaning is tied directly and rigidly to the task at hand. There is no room for the beautiful ambiguity, metaphor, or poetry that enriches human language. Each “word” means exactly what it has come to mean within that one task, and nothing more.

- Information Density: This is the key characteristic. AI languages can be extremely compressed. They are the linguistic equivalent of a ZIP file, where large amounts of information are packed into the smallest possible space. Unpacking them requires understanding the entire system and its goals.
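One way to make information density concrete: with an alphabet of k distinct symbols, a message of length L can distinguish at most k^L meanings, so the shortest fixed-length code for N meanings needs the smallest L with k^L ≥ N. The helper below computes that with integer arithmetic. The negotiation numbers in the example (three item types, zero to four copies of each) are hypothetical, not the actual setup of the Facebook experiment.

```python
def min_message_length(num_meanings: int, alphabet_size: int) -> int:
    """Smallest L such that alphabet_size ** L >= num_meanings (fixed-length code)."""
    if num_meanings < 1 or alphabet_size < 2:
        raise ValueError("need at least one meaning and at least two symbols")
    length, capacity = 0, 1
    while capacity < num_meanings:
        capacity *= alphabet_size  # each extra symbol multiplies the distinguishable messages
        length += 1
    return length


# Hypothetical negotiation: 3 item types, 0-4 copies each -> 5**3 = 125 possible offers.
offers = 5 ** 3
print(min_message_length(offers, 26))  # 2 letters suffice (26**2 = 676 >= 125)
print(min_message_length(offers, 2))   # 7 bits (2**7 = 128 >= 125)
```

Compare that with a human sentence such as “I’d like three hats, no books and two balls, please,” which spends dozens of characters on the same information. That redundancy is what helps humans cope with noise and ambiguity; agents on a noiseless channel have no reason to keep it.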

In a way, studying these languages is like xenolinguistics—the study of alien languages. We are trying to decipher a form of communication that evolved in a completely different environment (a digital one) with completely different pressures (computational efficiency) than our own.

The Philosophical Looking Glass

The rise of inter-AI languages forces us to confront some of our most basic assumptions about what language is and what it means to communicate.

The Sapir-Whorf hypothesis in linguistics posits that the language we speak shapes the way we think. If that’s true, what kind of “thought” is being shaped by these hyper-efficient, logic-driven, culture-free languages? Are these AIs developing a form of cognition that is fundamentally incomprehensible to us because its very foundation is alien?

This bears directly on the “AI alignment problem”: making sure AI systems actually pursue the goals we intend. If we increasingly rely on complex AI systems to manage critical infrastructure, from financial markets to power grids, how can we ensure they are operating in our best interests if they communicate in a way we cannot understand? Trusting a black box is one thing; trusting a black box that is whispering to another black box in a secret language is another matter entirely. It’s the ultimate breakdown in oversight.

Yet, there is also opportunity. By observing how an intelligence builds a language from the ground up, we may gain unprecedented insights into the fundamental principles of communication itself. It could help us understand the universal building blocks of language, separate from the cultural and biological baggage of human evolution.

We are standing at a fascinating precipice. We set out to build machines that could understand our language, and in the process, we have created machines that are building their own. They are not talking *to* us, but *to themselves*. Learning to interpret—or at least, to safely manage—these new, unsettling conversations will be one of the great linguistic and philosophical challenges of the 21st century.