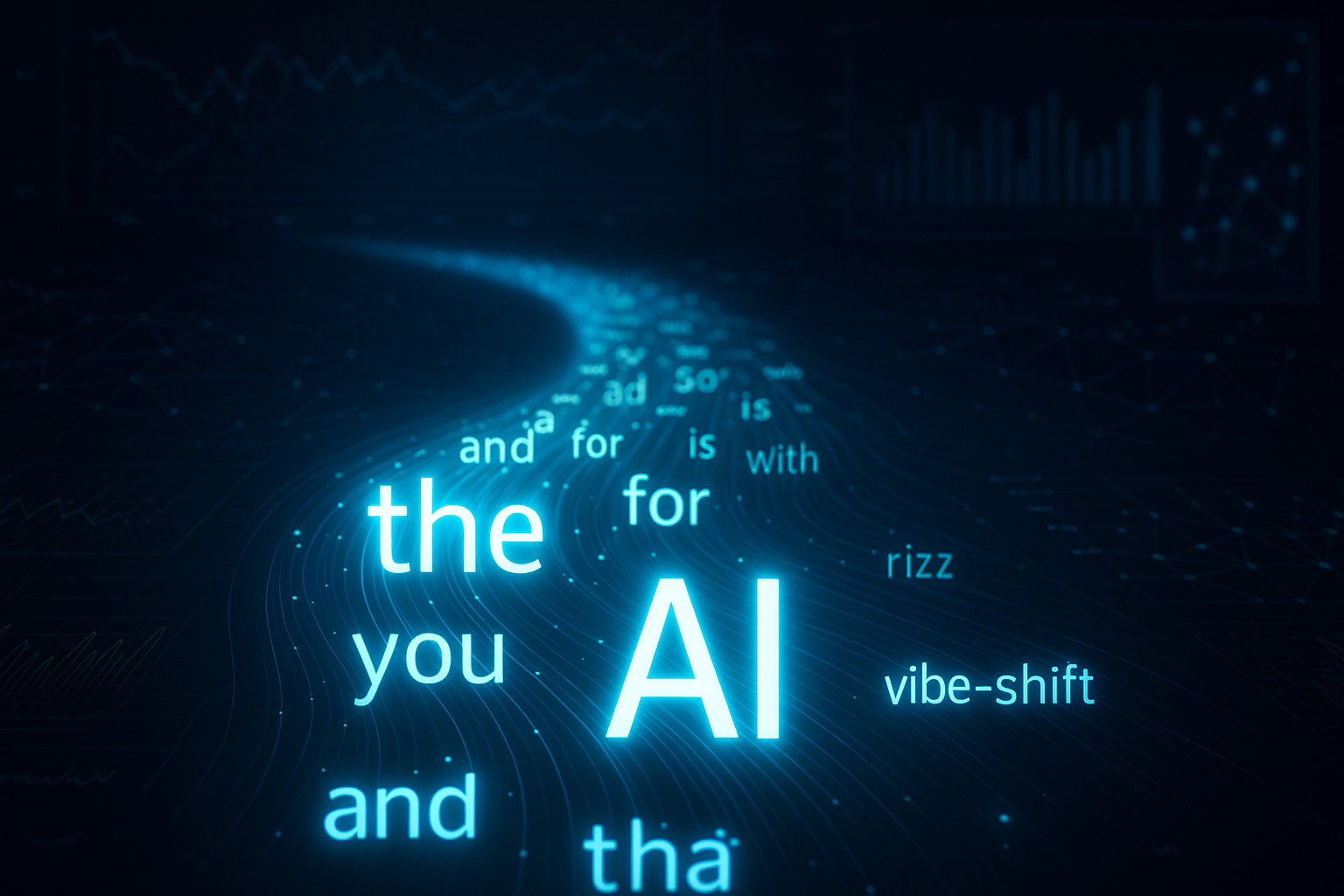

Shattering Sentences: The Art of Tokenization

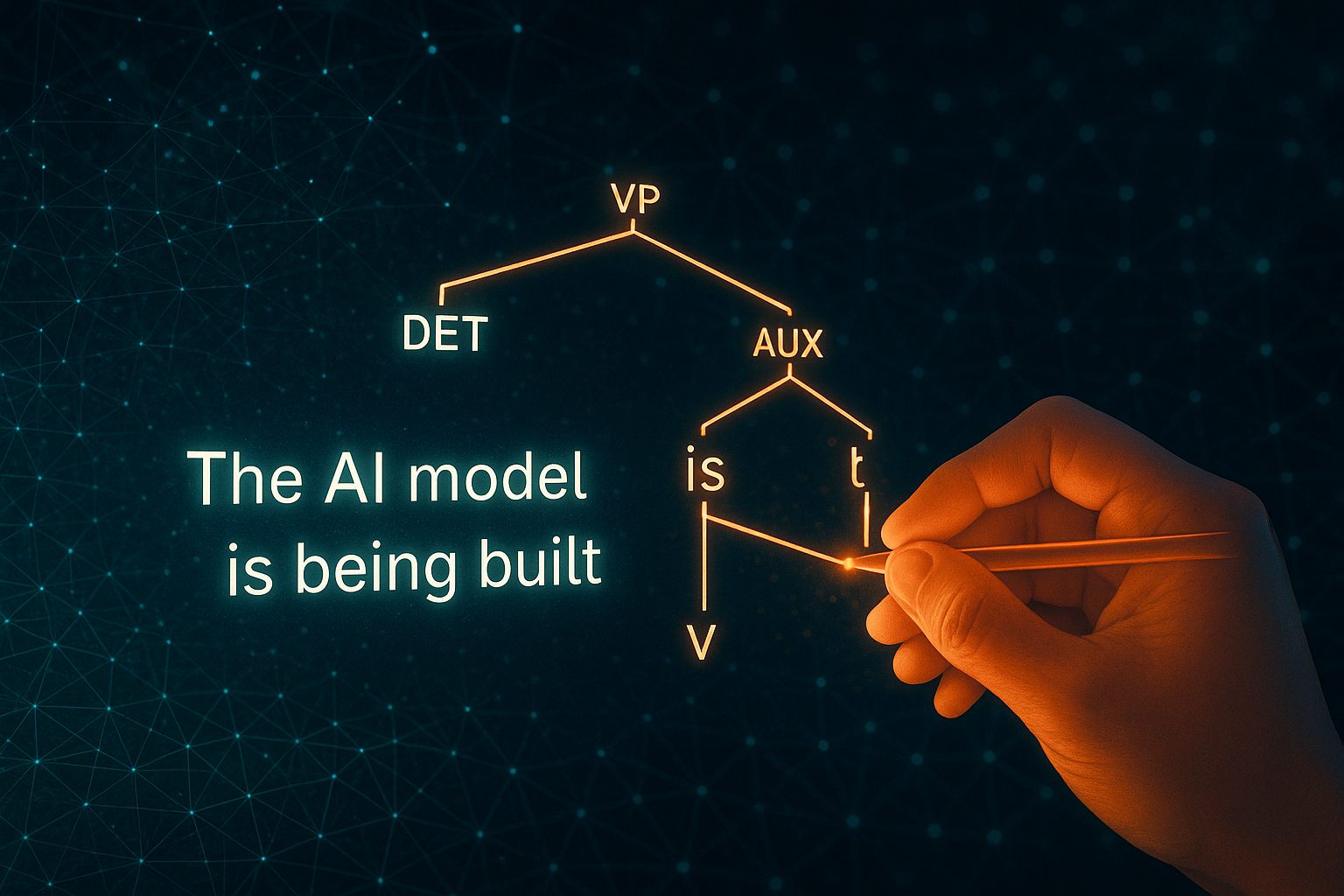

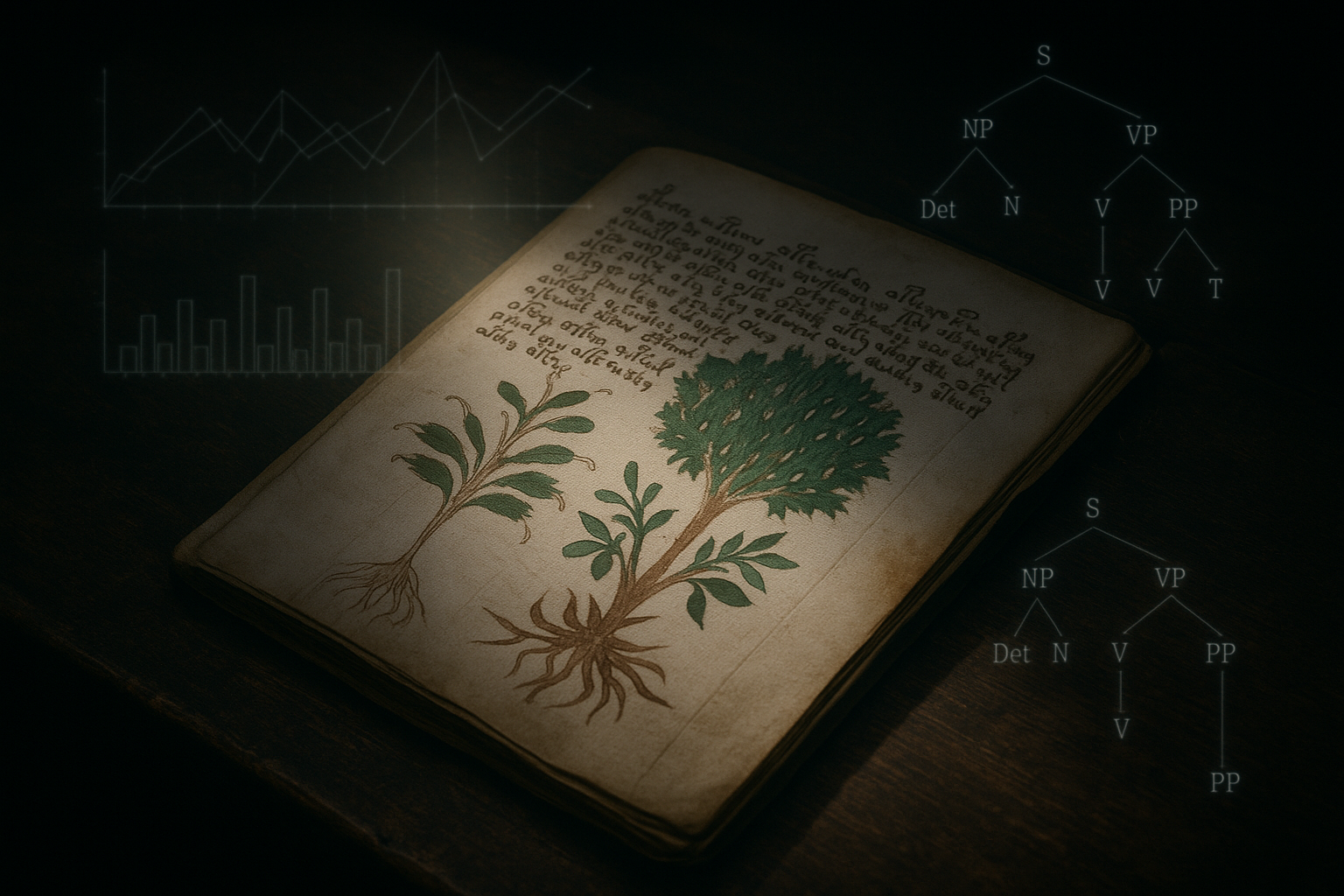

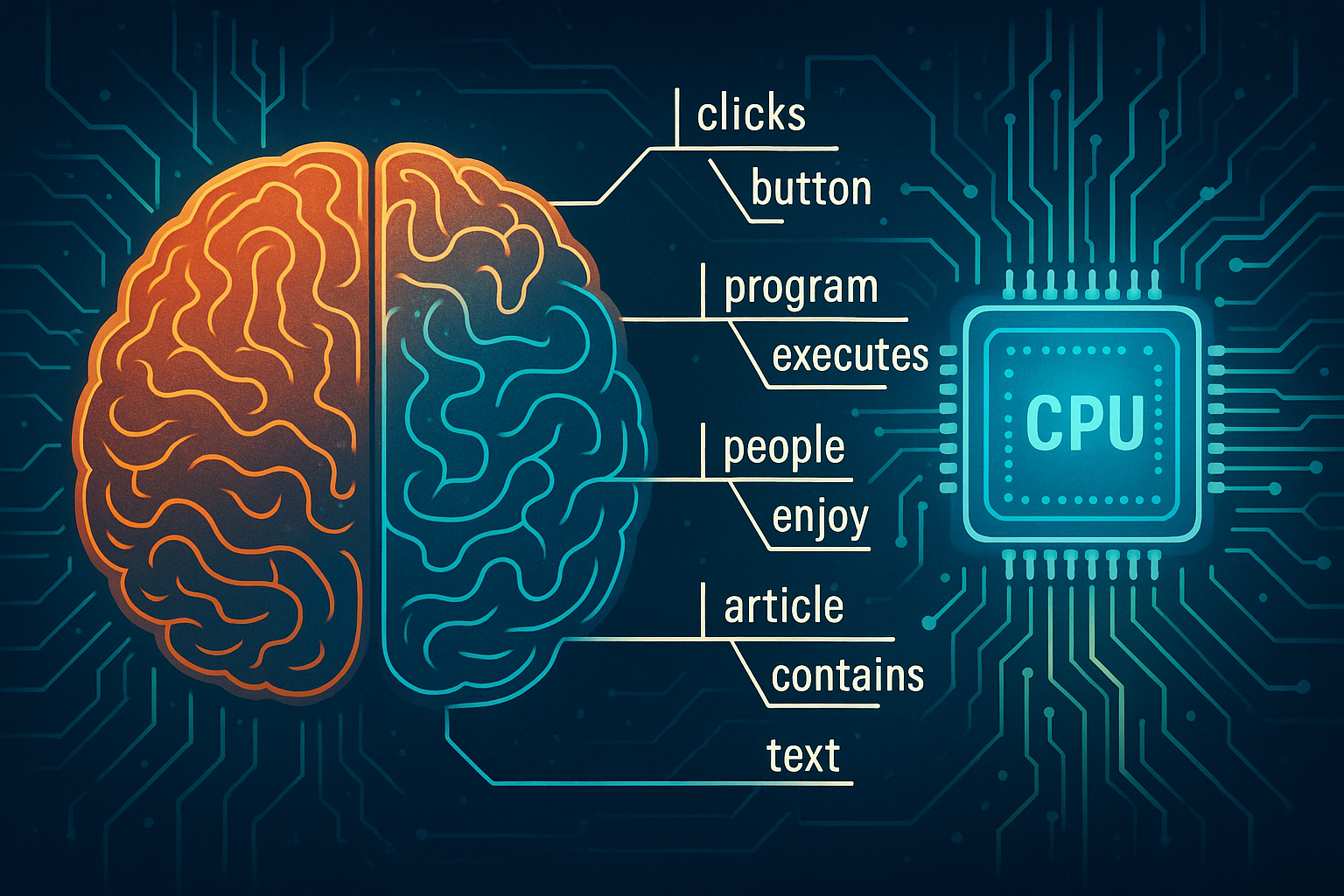

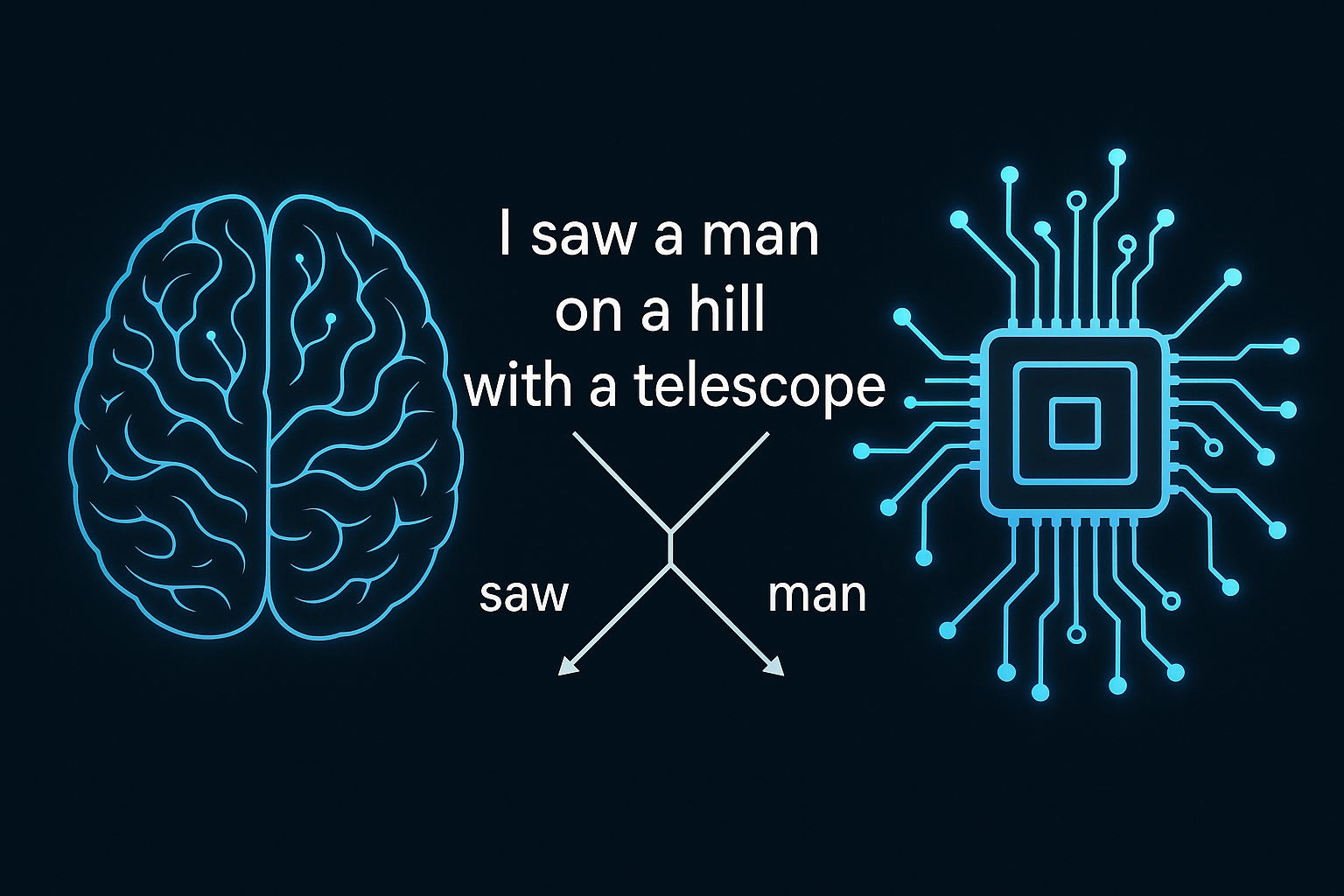

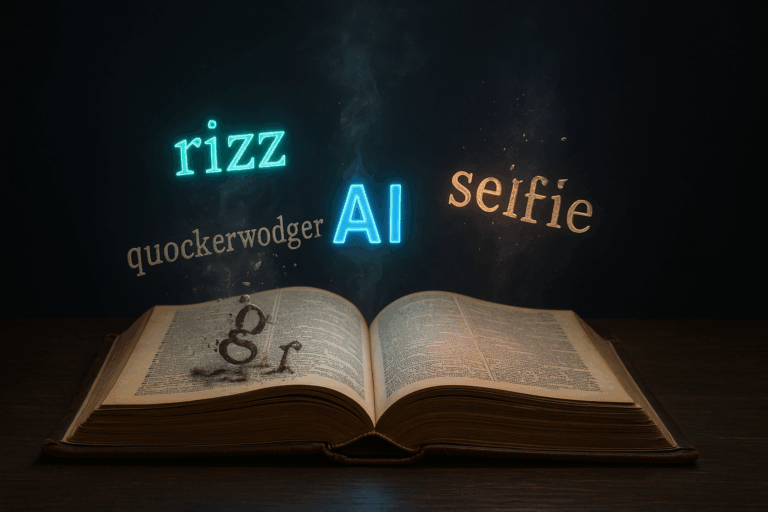

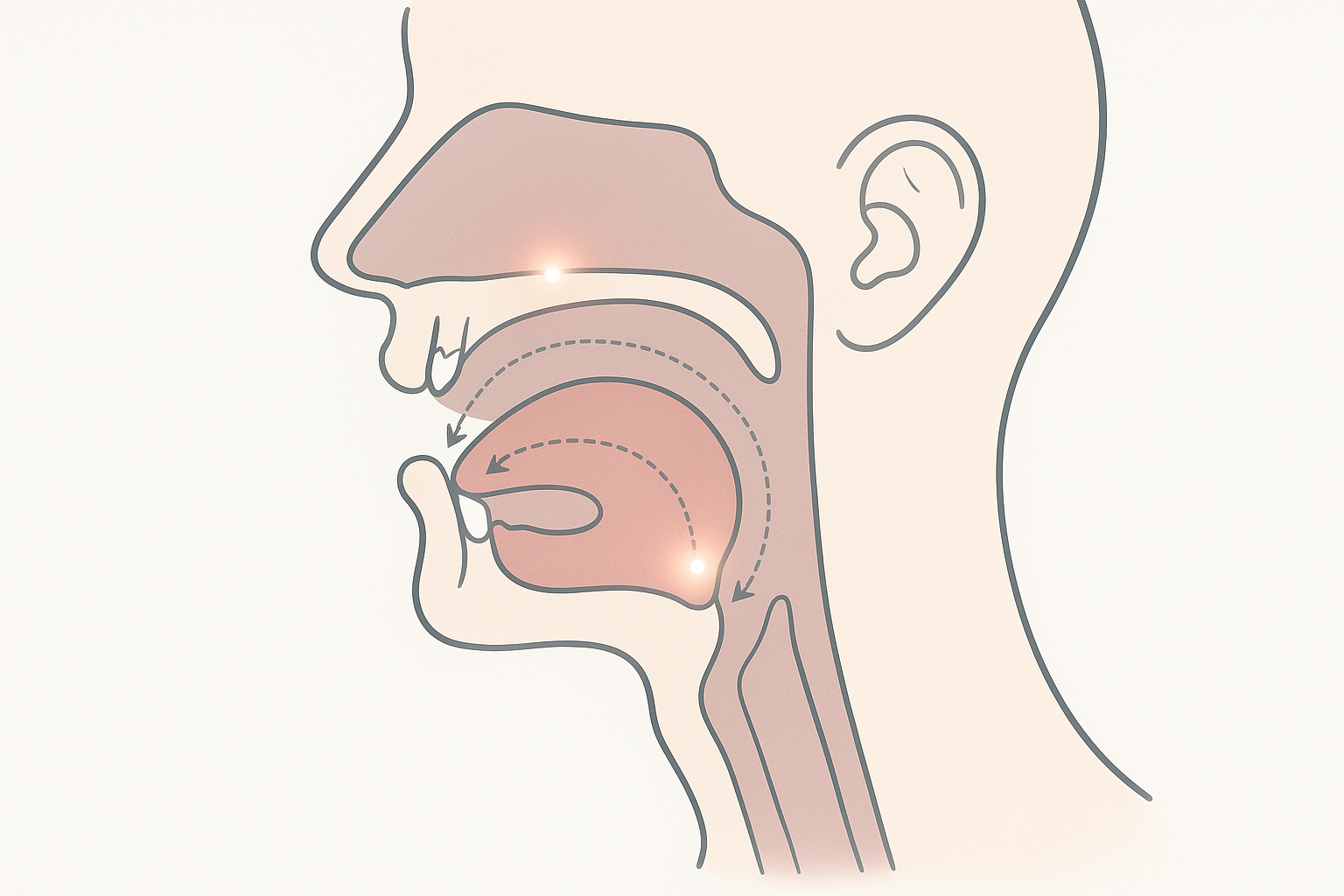

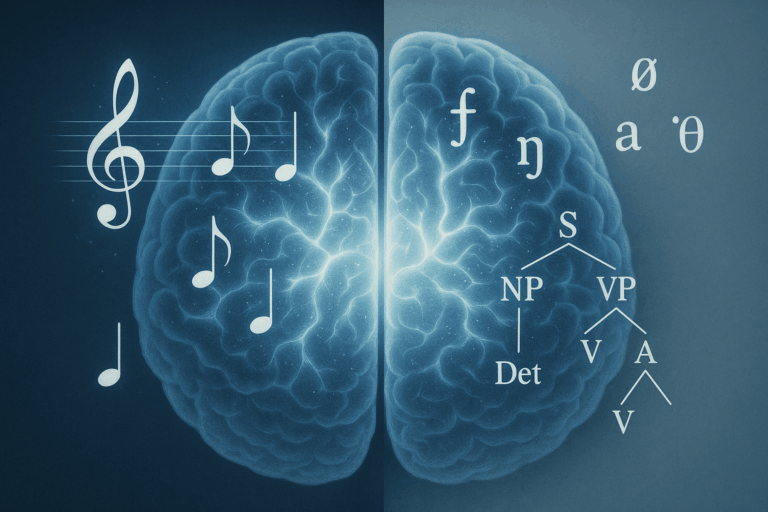

Before an AI system can process language, it must first shatter sentences into pieces, a process called tokenization. This first step is more complex than it seems, because each language poses its own puzzles: English contractions such as "don't", German compound nouns written as a single long word, and Chinese text written without spaces between words. This largely invisible work, where computer science meets linguistics, underlies the language technology we use every day.
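To make these puzzles concrete, here is a minimal sketch in Python that compares two simple strategies. The function names `whitespace_tokenize` and `rule_tokenize`, and the regular expression itself, are illustrative assumptions and are not taken from any particular library. The rule-based version splits English contractions in one common style ("do" + "n't"), but neither approach can segment Chinese text or break up a German compound.

```python
import re


def whitespace_tokenize(text):
    """Naive baseline: split on runs of whitespace only."""
    return text.split()


# Hypothetical rule set, tried in order at each position:
#   1. a word stem that is directly followed by "n't"  (Do | n't)
#   2. the "n't" suffix itself
#   3. an apostrophe suffix such as 's or 're
#   4. any other run of word characters
#   5. a single punctuation mark
_TOKEN_RE = re.compile(r"\w+(?=n't)|n't|'\w+|\w+|[^\w\s]")


def rule_tokenize(text):
    """Regex tokenizer that separates contractions and punctuation."""
    return _TOKEN_RE.findall(text)


english = "Don't panic, it's fine."
print(whitespace_tokenize(english))
# ["Don't", 'panic,', "it's", 'fine.']
print(rule_tokenize(english))
# ['Do', "n't", 'panic', ',', 'it', "'s", 'fine', '.']

# Chinese has no spaces, so both strategies return the whole
# sentence as a single token.
chinese = "我喜欢自然语言处理"
print(len(whitespace_tokenize(chinese)), len(rule_tokenize(chinese)))
# 1 1

# A German compound noun also survives as one long token.
german = "Donaudampfschifffahrtsgesellschaft"
print(rule_tokenize(german) == [german])
# True
```

Whitespace splitting leaves punctuation glued to words, and the regex fixes that only for languages that mark word boundaries with spaces. Segmenting Chinese or decomposing compounds typically needs a dictionary or a learned model, which is beyond the scope of this sketch.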