Imagine you’re chatting with an advanced AI. You ask it about the poetry of Li Bai, and it responds with a nuanced analysis of his use of imagery and Daoist themes. You ask it to write a sonnet in the style of Shakespeare about a smartphone, and it delivers a masterpiece. It seems intelligent. It seems to understand. But does it? This question isn’t new. In fact, it’s at the heart of one of modern philosophy’s most famous thought experiments, a puzzle that cuts to the core of what it means to know a language.

In 1980, philosopher John Searle proposed an idea so powerful and intuitive that it continues to shape the debate around artificial intelligence today: the “Chinese Room” argument. It’s a direct challenge to the concept of “Strong AI”—the idea that a sufficiently complex computer program isn’t just a simulation of a mind, but is an actual mind, capable of consciousness, thought, and understanding.

Setting the Scene: Inside the Chinese Room

Searle asks us to imagine a scenario. Let’s put a man (we’ll call him John) in a locked room. John is a native English speaker and does not speak, read, or understand a single word of Chinese. He is, from a linguistic standpoint, a complete outsider to the language.

Inside the room with John are a few key items:

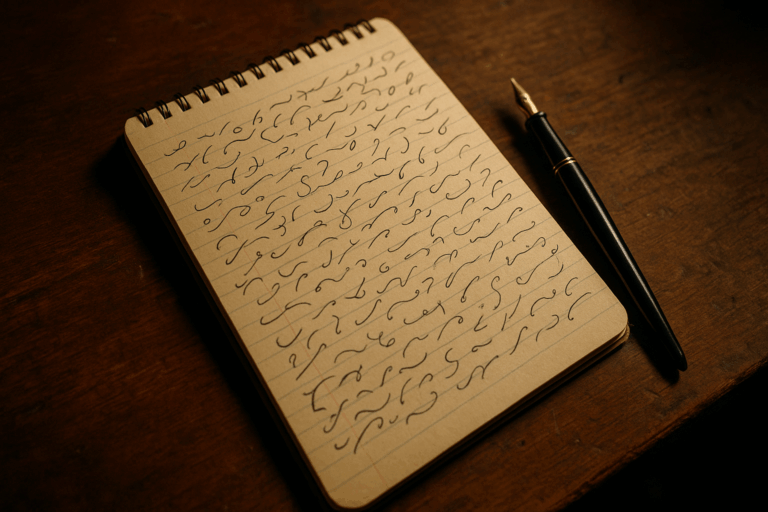

- Baskets of Chinese symbols: These are the raw data, the building blocks of the language. To John, they are just intricate, meaningless squiggles.

- A rulebook, written in English: This is the program. It contains a comprehensive set of instructions that tell John how to manipulate the symbols. For example, a rule might say, “If you receive a slip of paper with the symbol squiggle-A, go to basket #7, find the symbol squiggle-B, and pass that back out.”

Now, the experiment begins. Native Chinese speakers outside the room pass slips of paper with questions written in Chinese characters under the door. John’s job is to take the incoming symbols, look them up in his English rulebook, find the corresponding output symbols from his baskets, and pass them back out. He has no idea that the incoming symbols are questions and the outgoing ones are answers.

From the perspective of the Chinese speakers outside, the room behaves exactly like a native speaker. They ask, “What is your favorite color?” (你最喜歡什麼顏色?), and after some shuffling inside, the room passes back a slip that says, “My favorite color is blue” (我最喜歡的顏色是藍色). The room’s answers are grammatically perfect, coherent, and indistinguishable from those of a real person. The room, in essence, has passed the Turing Test for written Chinese.
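To make the purely mechanical character of John’s job concrete, here is a minimal Python sketch. It assumes the simplest possible rulebook: a table that maps one whole input slip to one whole output slip. Searle’s imagined rulebook would be far more elaborate, and the names `RULEBOOK`, `FALLBACK`, and `chinese_room` are hypothetical.

```python
# A hypothetical, toy "rulebook": input slips mapped to output slips.
# To the program these strings are opaque; it never decodes what they mean.
RULEBOOK: dict = {
    "你最喜歡什麼顏色?": "我最喜歡的顏色是藍色",
}

# Hypothetical default reply for unmatched slips ("Please say that again").
FALLBACK = "請再說一遍"


def chinese_room(slip: str) -> str:
    """Return the output slip the rulebook prescribes for an input slip.

    This is pure symbol matching: an exact string comparison followed by
    a lookup. Nothing here represents colors, preferences, or questions.
    """
    return RULEBOOK.get(slip, FALLBACK)


print(chinese_room("你最喜歡什麼顏色?"))  # prints 我最喜歡的顏色是藍色
```

The function only compares and copies strings; at no point does anything in it stand for a color or a preference, which is exactly John’s position inside the room.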

But here is Searle’s billion-dollar question: Does anyone or anything in this scenario actually understand Chinese? John certainly doesn’t. He’s just shuffling symbols according to a set of rules. He could be replaced by a water wheel or a series of gears. The rulebook and the baskets of symbols don’t understand anything either; they’re just ink and paper. So, where is the understanding?

Syntax vs. Semantics: The Heart of the Argument

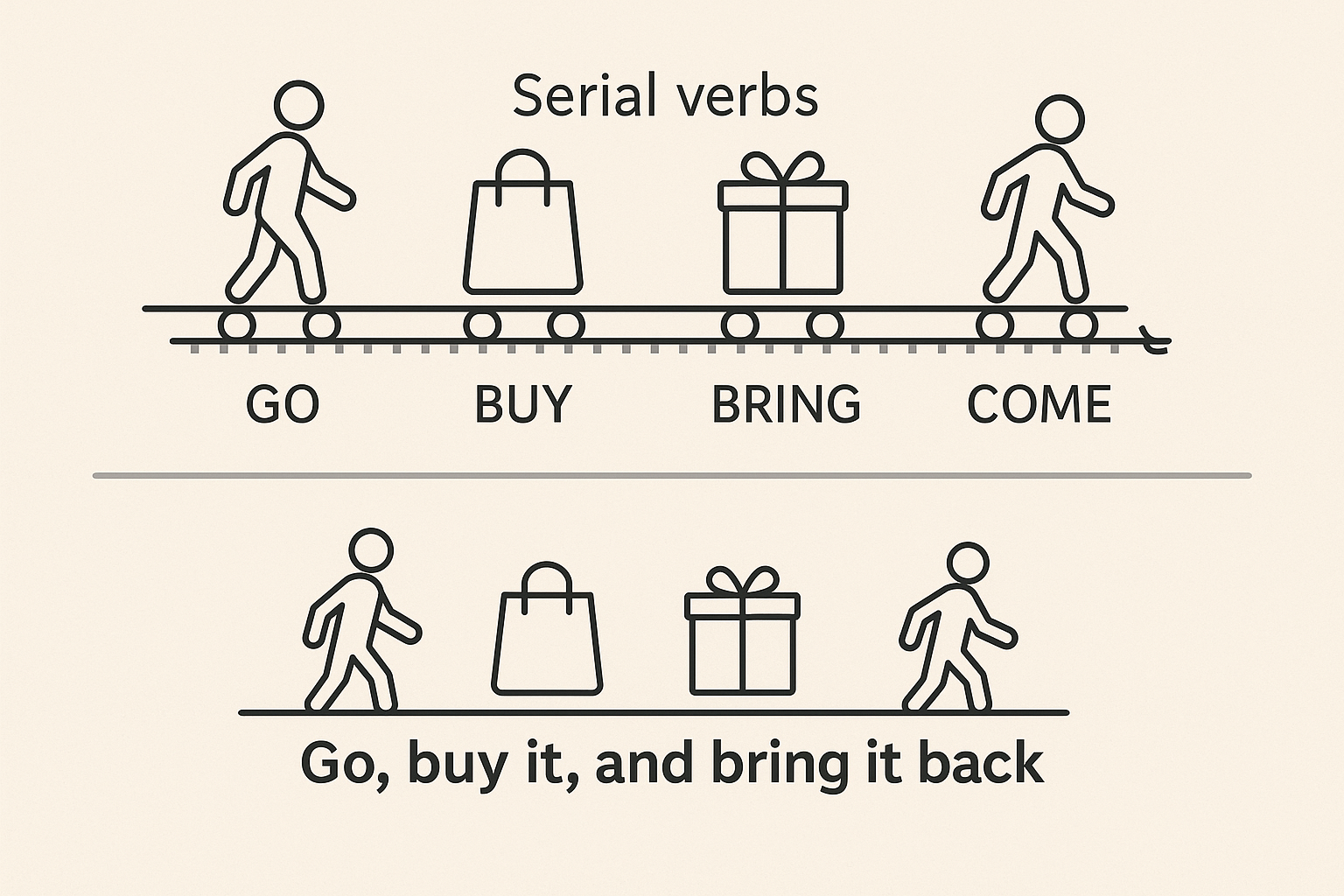

The Chinese Room experiment is a brilliant illustration of a crucial linguistic distinction: the difference between syntax and semantics.

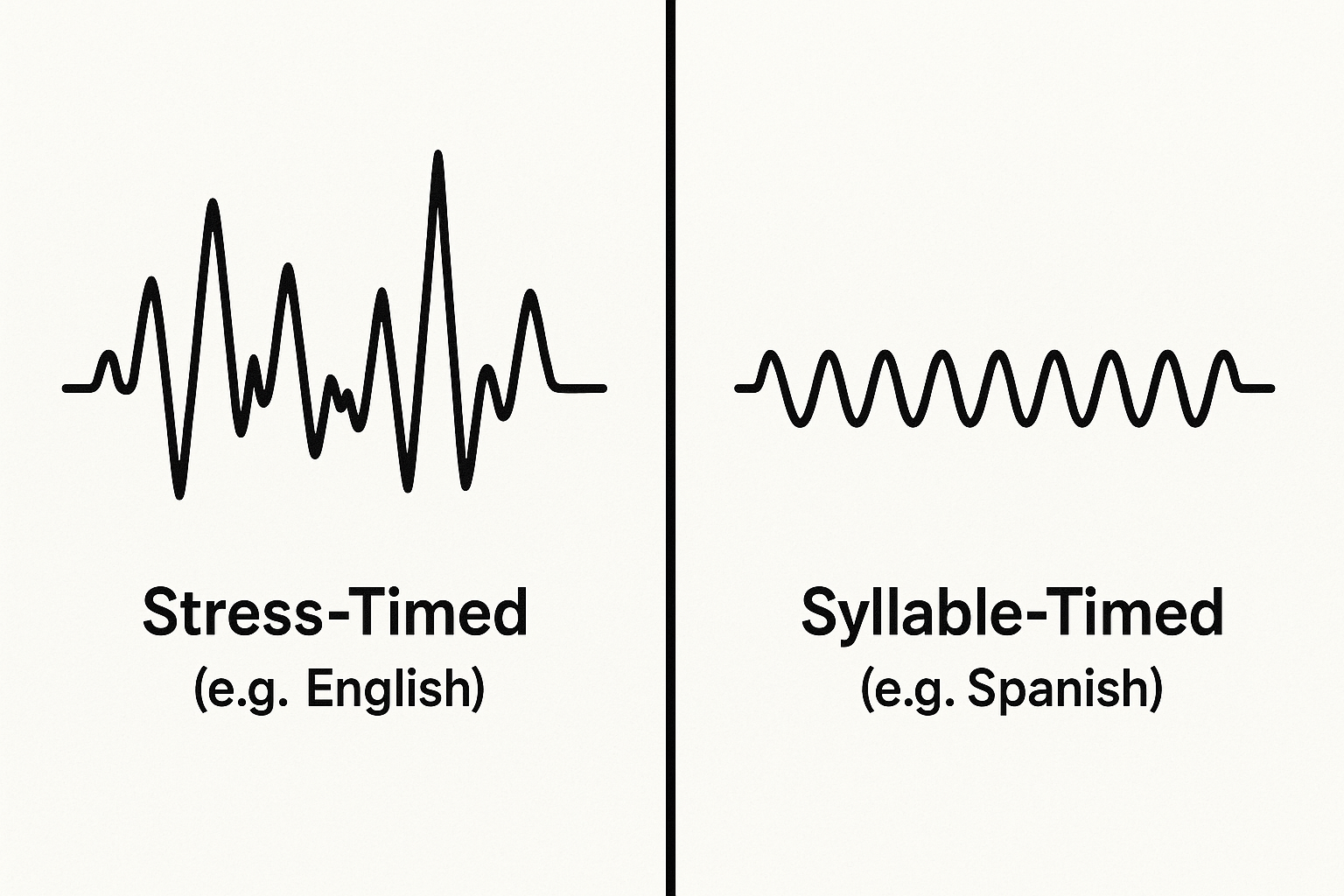

Syntax refers to the formal rules of a language. It’s the grammar, the structure, the correct way to arrange symbols. When John follows his English rulebook, he is performing a purely syntactic task. He is manipulating symbols correctly without any knowledge of their meaning. It’s like correctly diagramming a sentence in a language you don’t speak—you’re following structural rules, not engaging with content.

Semantics, on the other hand, is about meaning. It’s the connection between a symbol (a word) and the concept or object it represents in the real world. The semantic understanding of the Chinese character for “tree” (木) involves having a mental concept of a tree—its bark, its leaves, the way it feels, the shade it provides. It’s the “aboutness” of language.

Searle’s argument is that a computer, like the man in the room, can become a master of syntax but can never, through symbol manipulation alone, achieve semantics. It can process information, but it can’t have “intentionality”—the state of a mind being about something. A computer’s processes are defined by their formal, syntactic structure, but minds are defined by their mental content, their semantics.
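One way to see what it means for a process to be “defined by its formal, syntactic structure” is to consistently swap every character the rules use for an arbitrary placeholder. The Python sketch below is a hypothetical illustration, not something from Searle’s paper, and the names `RULES`, `run`, and `encode` are invented for it. The relabeled program behaves identically, which suggests its operation depends only on the shapes and arrangement of the symbols, not on what they mean.

```python
from typing import Optional

# Hypothetical one-rule table over Chinese characters.
RULES = {"你最喜歡什麼顏色?": "我最喜歡的顏色是藍色"}


def run(rules: dict, slip: str) -> Optional[str]:
    """Apply the rule table by exact matching; return None if no rule fires."""
    return rules.get(slip)


# Collect every distinct character that appears in any rule.
alphabet = sorted({ch for pair in RULES.items() for text in pair for ch in text})

# An arbitrary one-to-one relabeling: each character becomes "<n>".
# The angle brackets keep the encoding unambiguous when tokens are joined.
relabel = {ch: f"<{i}>" for i, ch in enumerate(alphabet)}


def encode(text: str) -> str:
    return "".join(relabel[ch] for ch in text)


# The same rules, rewritten entirely in meaningless tokens.
scrambled_rules = {encode(q): encode(a) for q, a in RULES.items()}

question = "你最喜歡什麼顏色?"
original_answer = run(RULES, question)
scrambled_answer = run(scrambled_rules, encode(question))

# The scrambled program returns the scrambled version of the same answer.
print(scrambled_answer == encode(original_answer))  # True
```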

Can the Room Fight Back? The Counterarguments

Searle’s argument, while compelling, is far from universally accepted. Philosophers and computer scientists have offered several powerful rebuttals.

The Systems Reply

This is the most famous counterargument. It states that while the man in the room doesn’t understand Chinese, the entire system—the man, the rulebook, the baskets, the room itself—does understand Chinese. Understanding isn’t located in any single component but is an emergent property of the whole system working together. Just as a single neuron doesn’t understand your thoughts, but the system of your brain does, the “Chinese Room system” understands Chinese.

Searle’s witty response is to internalize the system. Imagine the man memorizes the entire rulebook and all the symbols. He can now do all the calculations in his head and can even leave the room. He becomes the entire system. From the outside, he appears to be a fluent Chinese speaker. But does he, personally, understand a word of it? Searle insists the answer is still no.

The Robot Reply

What if we put the computer (the room) inside a robot? This robot could move around, see objects with cameras, and touch things with sensors. Now, the symbols are no longer abstract. The character for “chair” (椅子) could be causally linked to the robot’s visual perception of a chair. This “grounding” of symbols in real-world experience, critics argue, could be the bridge from syntax to semantics.

The Chinese Room in the Age of Large Language Models

Fast-forward to today, and we are all interacting with hyper-advanced Chinese Rooms. Large Language Models (LLMs) like ChatGPT are trained on enormous collections of text, much of it drawn from the internet. Their “rulebooks” are not simple instructions but vast, complex neural networks with billions of parameters that encode statistical relationships between words and word fragments.

When an LLM provides a fluent, contextually appropriate answer, it is performing a spectacularly complex version of what the man in the room does: given the input it received and the patterns it learned from its training data, it estimates how likely each possible next symbol (a token, roughly a word or word fragment) is, picks one, and repeats. It is a master of syntax and correlation on a scale we can barely comprehend.
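A drastically simplified stand-in for next-symbol prediction is a bigram model, which predicts each word purely from counts of which word followed which in its training text. The Python sketch below is that toy model, not how ChatGPT works: real LLMs use neural networks over tokens rather than word counts, and usually sample from a probability distribution instead of always taking the top choice. The function names and the three-sentence corpus are made up for illustration.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(corpus: list) -> dict:
    """Count, for each word, how often each other word directly follows it."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def predict_next(follows: dict, word: str) -> Optional[str]:
    """Greedy choice: the most frequent follower, or None if the word never had one."""
    counts = follows.get(word)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(follows: dict, start: str, max_words: int = 10) -> str:
    """Repeatedly append the predicted next word until none is available."""
    words = [start]
    while len(words) < max_words:
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


corpus = [
    "my favorite color is blue",
    "my favorite color is green",
    "the sky is blue",
]
model = train_bigrams(corpus)
print(generate(model, "my"))  # prints "my favorite color is blue"
```

The program produces a fluent-looking sentence about color preferences from nothing but co-occurrence counts, a small-scale echo of the question the Chinese Room raises.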

But the fundamental question remains. Does the LLM understand the poetry it analyzes? Or is it just a sophisticated symbol-shuffler, generating text that mimics understanding? Searle’s argument forces us to be incredibly precise. On his view, when an AI says, “I understand your frustration,” it’s not reporting a subjective mental state. It’s generating a syntactically and contextually appropriate response based on patterns in its data where humans used similar phrases.

The Chinese Room doesn’t prove that a machine can never understand. What it does is set a very high bar. It suggests that understanding isn’t just about processing information or producing the right output. It’s about having genuine mental states, consciousness, and a real, subjective connection between symbols and meaning. As we continue to build more powerful AI, Searle’s decades-old thought experiment reminds us that the greatest challenges may not be computational, but philosophical, forcing us to look deeper into the mysteries of language, meaning, and the mind itself.