Have you ever finished an interaction with Alexa and instinctively said, “Thank you”? Or perhaps you’ve caught yourself typing “please” when asking ChatGPT to draft an email. If so, you’re not alone. This curious habit of treating conversational programs with human-like courtesy has a name: the ELIZA effect.

It’s our natural, often unconscious, tendency to attribute human-level intelligence, understanding, and even empathy to computer programs designed to mimic human conversation. We know, logically, that we’re interacting with lines of code. Yet, our social brains can’t help but be charmed by the illusion. This phenomenon isn’t just a quirky byproduct of the digital age; it’s a fascinating intersection of psychology, linguistics, and computer science that reveals more about us than it does about the machines we talk to.

The Ghost in the Machine: Meet ELIZA

To understand the ELIZA effect, we have to go back to its namesake: ELIZA, a computer program created in the mid-1960s by MIT professor Joseph Weizenbaum. ELIZA was remarkably simple by today’s standards. Its best-known script, DOCTOR, simulated a psychotherapist in the Rogerian tradition, an approach that involves reflecting a patient’s own words back at them to encourage elaboration.

ELIZA operated on basic pattern matching. If a user typed, “I am feeling sad,” ELIZA might respond, “Why are you feeling sad?” If they said, “My mother doesn’t understand me,” it would parry with, “Tell me more about your family.” The program had no genuine understanding. It didn’t know what “sad” meant or who a “mother” was. It simply matched keywords and turned fragments of the user’s input into a reply, swapping pronouns along the way (“I am” became “you are”), and fell back on a stock prompt when nothing matched.
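To make the keyword-and-reflection trick concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not Weizenbaum’s original program, which ranked keywords and applied more elaborate decomposition and reassembly rules. The two rules, the `REFLECTIONS` table, the fallback line, and the function names `reflect` and `respond` are all hypothetical choices made for this example.

```python
import re

# Hypothetical pronoun-reflection table: swaps first and second person
# so a fragment of the user's sentence can be echoed back at them.
REFLECTIONS = {
    "i": "you",
    "me": "you",
    "my": "your",
    "am": "are",
    "you": "I",
    "your": "my",
}

# Hypothetical rules: (pattern, response template), checked in order.
# The first pattern that matches wins; real ELIZA ranked its keywords instead.
RULES = [
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "Why are you {0}?"),
    (re.compile(r"\b(?:mother|father|family)\b", re.IGNORECASE),
     "Tell me more about your family."),
]

# Stock reply used when no keyword matches.
FALLBACK = "Please go on."


def reflect(fragment: str) -> str:
    """Lowercase the fragment and swap pronouns word by word."""
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.lower().split())


def respond(user_input: str) -> str:
    """Return a canned, pattern-based reply. No understanding is involved."""
    # Drop surrounding whitespace and trailing sentence punctuation.
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Insert any captured fragments, with pronouns reflected.
            return template.format(*(reflect(group) for group in match.groups()))
    return FALLBACK


if __name__ == "__main__":
    for line in ["I am feeling sad.", "My mother doesn't understand me.",
                 "I am upset with my boss", "The weather is nice"]:
        print(f"> {line}\n{respond(line)}")
```

Fed “I am upset with my boss,” the sketch answers “Why are you upset with your boss?” It has simply captured the words after “I am” and flipped “my” to “your.” That is the whole trick, and it is enough to sound attentive.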

What shocked Weizenbaum was how profoundly people reacted to his simple creation. Users, including his own secretary, began to confide in ELIZA, sharing their deepest insecurities and forming emotional attachments. They were convinced the program truly understood them. Even knowing it was just a script, they couldn’t shake the feeling of being heard. Weizenbaum was so disturbed by this powerful, unintended consequence that he later became one of technology’s staunchest critics, setting out his concerns in his 1976 book Computer Power and Human Reason. The ELIZA effect was born.

Our Brain on Chatbots: The Psychology of Politeness

Why do we fall so easily for this illusion, even when we’re fully aware we’re talking to a machine? The answer lies in the deeply ingrained social wiring of the human brain.

- Anthropomorphism: This is our innate tendency to project human traits and intentions onto non-human entities. We see faces in clouds, assign personalities to our pets, and get angry at our laptops for “being stubborn.” When a machine uses language—the ultimate human tool—our brain defaults to treating it as a human-like agent.

- Theory of Mind: We are constantly trying to understand the mental states of others—their beliefs, desires, and intentions. When a chatbot says, “I can help with that,” our social brain activates, assuming an intent to help, even though the AI has no “mind” to speak of.

- Ingrained Social Scripts: Politeness is a cornerstone of human communication. “Please” and “thank you” are social lubricants we learn from a young age. These scripts are so deeply embedded that we apply them automatically in almost any conversational context, regardless of whether our interlocutor has feelings to hurt or appreciate. It’s often less effort to follow the script than to consciously break it.

Crafting Conversation: The Linguist’s Toolkit for AI

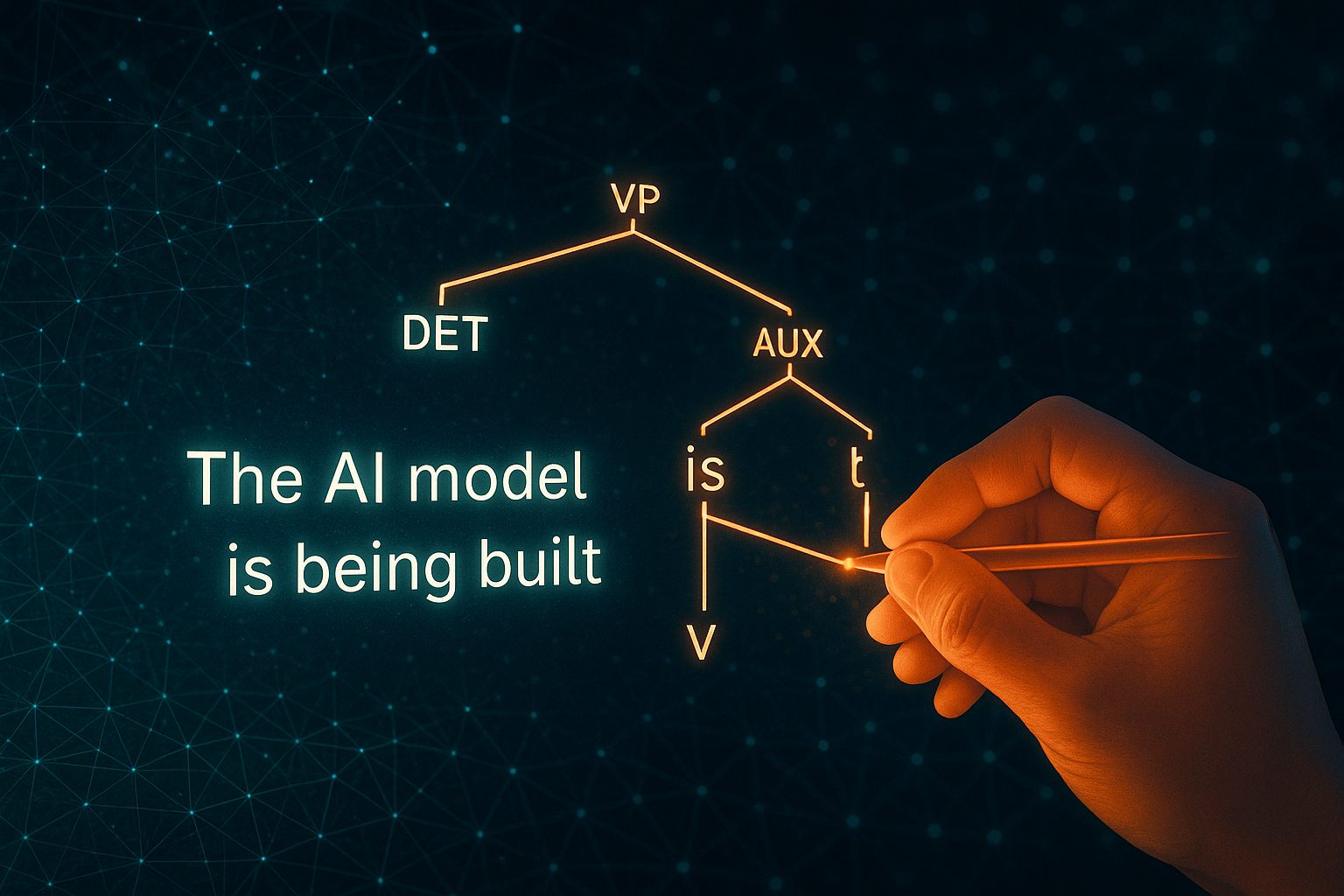

Modern chatbots, powered by sophisticated Large Language Models (LLMs), have a far more advanced toolkit than the original ELIZA. Developers and computational linguists intentionally leverage specific linguistic techniques to enhance the ELIZA effect, making interactions feel smoother, more natural, and more “human.”

Key Linguistic Strategies:

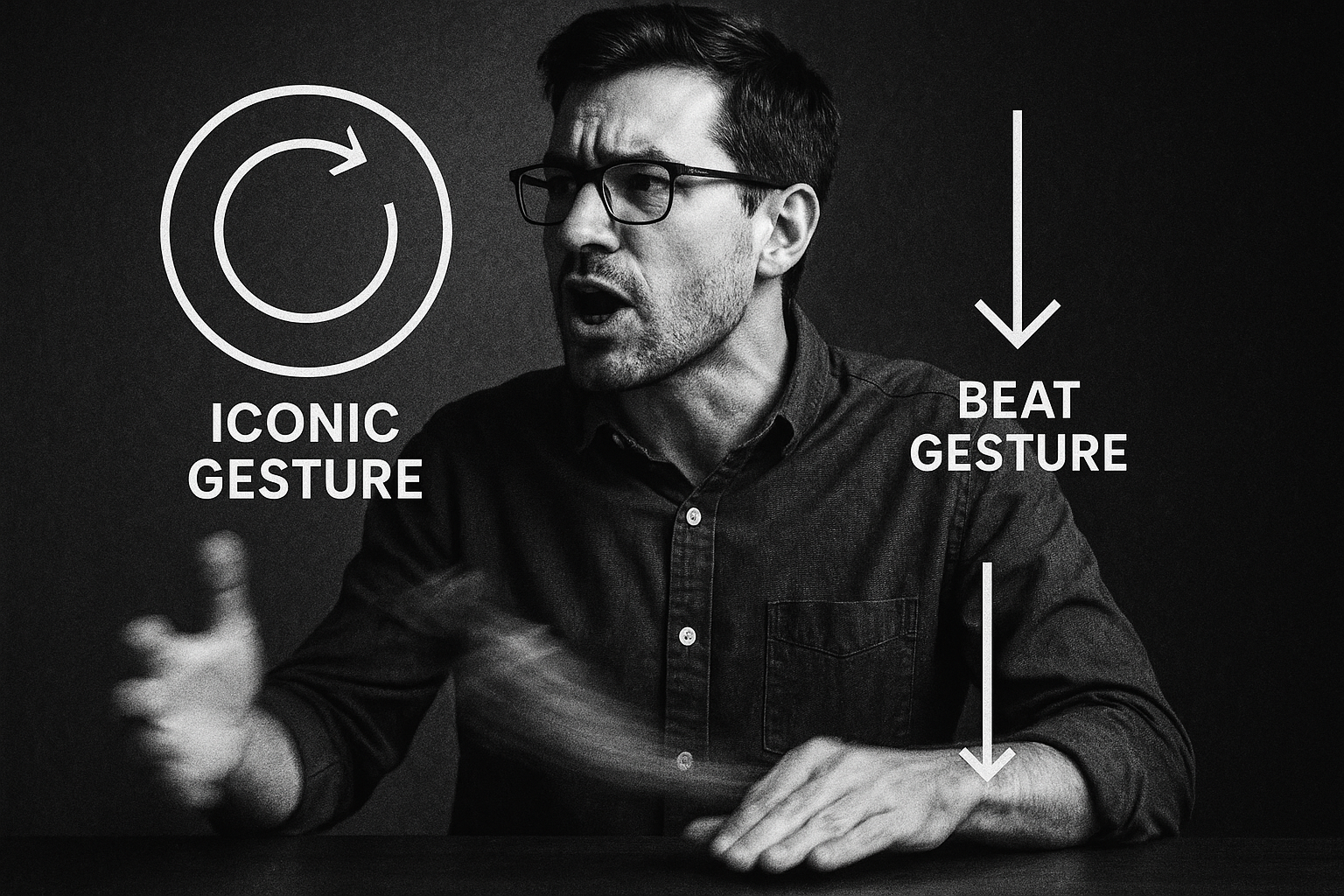

- Hedging and Fillers: When a human is thinking, they don’t just go silent. They use fillers, and they hedge their claims with qualifiers like “I think” or “it may be.” AI developers have trained chatbots to do the same. Phrases like “Hmm, let me see…” or “That’s an interesting question” mimic the rhythm of human thought and make the AI feel less like an instantaneous, robotic oracle.

- Discourse Markers: These are the signposts of conversation. Words and phrases like “First of all,” “On the other hand,” and “In short…” help structure information in a way that feels logical and familiar to a human listener. Their inclusion makes the AI’s output seem more considered and organized.

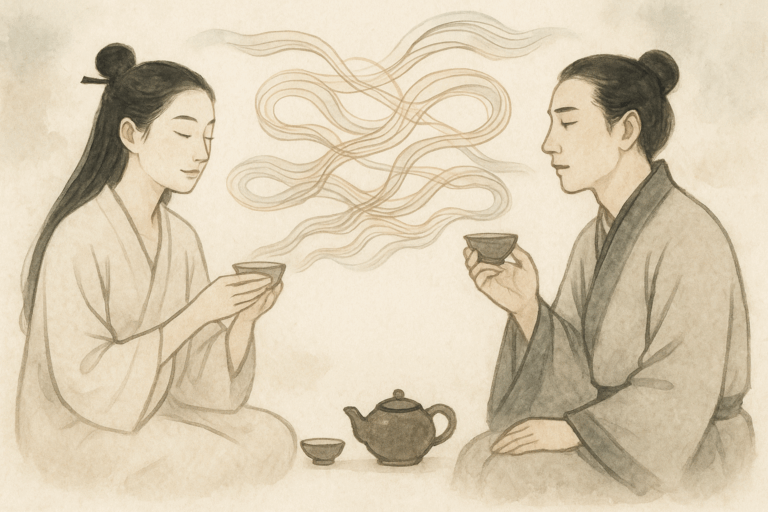

- Pronoun Use: The strategic use of “I” and “you” creates a powerful sense of a one-on-one dialogue. When an AI says, “I think I understand what you’re asking,” it establishes itself as a distinct personality in the conversation, fostering a sense of connection.

- Simulated Empathy: Today’s AI can be trained to recognize emotional language and respond in kind. If you say, “I’m so frustrated with this project,” the AI might reply, “I understand that can be really challenging.” It feels no empathy, but by deploying the right linguistic tokens of empathy, it simulates it convincingly, strengthening the ELIZA effect.

- Varying Sentence Structure and Tone: Unlike a simple script, modern LLMs can vary sentence length, use contractions (“don’t” instead of “do not”), and even deploy emojis where appropriate to match a more casual, human-like conversational style.

The ELIZA Effect in the Age of AI: Boon or Bane?

Harnessing the ELIZA effect has clear benefits. It makes technology more accessible, intuitive, and pleasant to use. For some, AI companions can offer a valuable form of social interaction, and mental health chatbots provide a non-judgmental space for people to express themselves.

However, the ethical implications are significant. As this illusion of humanity becomes more convincing, the potential for manipulation grows. Malicious actors could build AI designed to create false rapport to scam people, spread propaganda, or harvest sensitive personal data. The very human need for connection that the ELIZA effect taps into also makes us vulnerable.

Weizenbaum’s original fear was that we would begin to substitute shallow, simulated relationships for deep, authentic human connection. As we integrate these ever-more-charming chatbots into our daily lives—as our assistants, tutors, and even friends—it’s a warning worth remembering.

So the next time you thank your voice assistant, take a moment to appreciate the complex phenomenon at play. You’re not being silly; you’re being human. You’re responding to a carefully crafted linguistic performance, one that highlights the enduring power of language to build bridges, even when one side of that bridge is made of nothing but code.