
We marvel at the eloquence of modern AI. We ask it to draft an email, compose a sonnet, or explain quantum physics, and it responds with startling fluency. It feels like magic, as if a disembodied mind has simply absorbed the entirety of the internet and learned to talk. But this “magic” has a secret ingredient, a vast, hidden scaffolding built by human hands: linguistic annotation.

Large Language Models (LLMs) like GPT absorb most of their patterns from raw text, but whenever a system is explicitly trained or tested on the difference between a noun and a verb, or on the subtle sting of sarcasm, a human has to label the examples first. This labeling is an invisible engine of the AI revolution, a painstaking craft performed by thousands of linguistic annotators around the world. These are the unsung heroes who meticulously transform raw, messy human language into structured, machine-readable data.

What is Linguistic Annotation? The Digital Scribe’s Craft

At its core, linguistic annotation is the practice of adding labels—or metadata—to a piece of text. These labels provide explicit information about the language’s structure, meaning, and function. Think of it as creating a detailed instruction manual for language itself. While we humans process this information intuitively, a machine needs it all spelled out.

The annotations can be simple or incredibly complex. Some common types include:

- Part-of-Speech (POS) Tagging: Identifying each word as a noun, verb, adjective, preposition, etc. This is one of the most fundamental levels of annotation.

- Named Entity Recognition (NER): Finding and classifying entities like people (“Ada Lovelace”), organizations (“Google”), and locations (“Paris”).

- Sentiment Analysis: Labeling a text’s emotional tone as positive, negative, or neutral.

- Coreference Resolution: Linking pronouns to the nouns they refer to. In “The cat chased the mouse, and it was fast,” annotators clarify whether “it” refers to the cat or the mouse.
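To make the idea of "labels as metadata" concrete, here is a minimal sketch of how an entity annotation could be stored as a labeled character span that sits alongside the text. The `Span` class, the `annotate` helper, and the example sentence are all hypothetical and chosen only for illustration; real annotation tools use their own formats.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Span:
    start: int   # character offset where the span begins (inclusive)
    end: int     # character offset where the span ends (exclusive)
    label: str   # the category the annotator assigned

def annotate(text: str, phrase: str, label: str) -> Span:
    """Find the first occurrence of `phrase` in `text` and label it."""
    start = text.index(phrase)  # raises ValueError if the phrase is absent
    return Span(start, start + len(phrase), label)

# Hypothetical example sentence built from the entities mentioned above.
text = "Ada Lovelace visited Paris."
entities = [
    annotate(text, "Ada Lovelace", "PERSON"),
    annotate(text, "Paris", "LOCATION"),
]

for span in entities:
    print(f"{text[span.start:span.end]!r} -> {span.label}")
```

Keeping the labels separate from the text means the original sentence is never altered, and several annotators can label the same passage independently.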

Imagine the sentence: “The weary programmer drank her third coffee at midnight.”

An annotator might tag this as:

[The/DET] [weary/ADJ] [programmer/NOUN] [drank/VERB] [her/PRON] [third/ADJ] [coffee/NOUN] [at/PREP] [midnight/NOUN].

This simple act of labeling, when repeated millions of times, creates a massive dataset that teaches an AI the foundational patterns of a language.
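As a rough illustration of how such a tagged sentence becomes data a program can count and learn from, the sketch below reads the bracketed `[word/TAG]` notation used above into (word, tag) pairs. The notation is this article's own shorthand, not a standard file format, and `parse_tagged` is a hypothetical helper name.

```python
import re
from collections import Counter

tagged = (
    "[The/DET] [weary/ADJ] [programmer/NOUN] [drank/VERB] [her/PRON] "
    "[third/ADJ] [coffee/NOUN] [at/PREP] [midnight/NOUN]."
)

def parse_tagged(line: str) -> list[tuple[str, str]]:
    """Extract (word, tag) pairs from text written as [word/TAG] groups."""
    return re.findall(r"\[([^/\]]+)/([^\]]+)\]", line)

pairs = parse_tagged(tagged)
tag_counts = Counter(tag for _, tag in pairs)

for word, tag in pairs:
    print(f"{word:<12}{tag}")
print(tag_counts.most_common())
```

Repeated across millions of sentences, counts like these are the raw material from which a tagger can estimate which tag a word is likely to carry in a given context.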

Enter the Treebank: Mapping the Grammar of Language

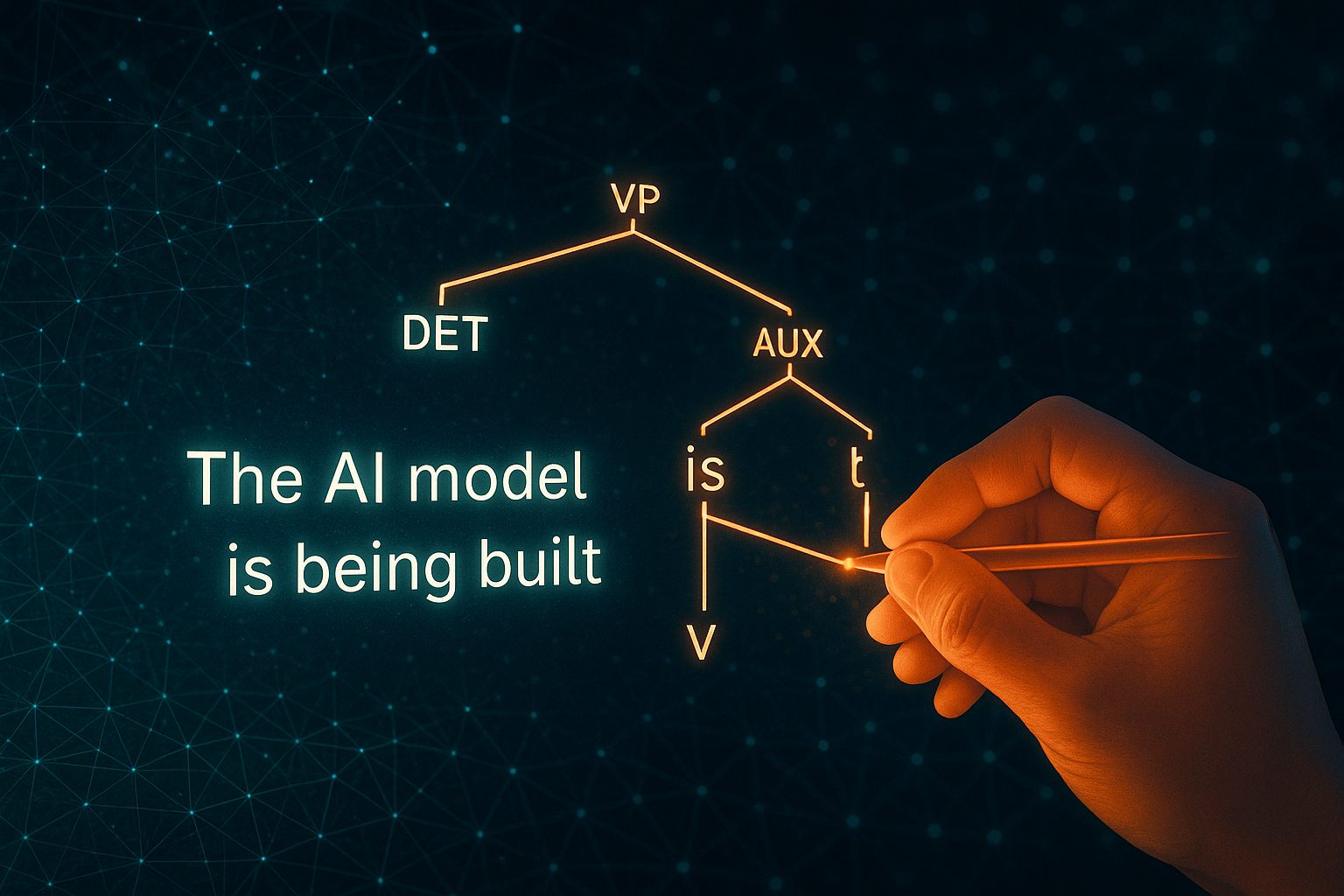

One of the most profound forms of annotation is a process called “treebanking.” If POS tagging is about identifying the words, treebanking is about mapping the relationships between them. Annotators create a “parse tree”—a hierarchical diagram that breaks a sentence down into its grammatical components, much like the sentence diagrams you may have drawn in school.

These trees show how words group together to form phrases (like noun phrases or verb phrases) and how those phrases combine to create a meaningful sentence. A treebank is a large corpus of text where every single sentence has been converted into one of these structural maps.

Consider the sentence “The man with the telescope saw a star.” A treebank annotation would show that “with the telescope” attaches to “the man” rather than to “saw”: the man has the telescope, and nothing in the sentence says he used it to see the star. This seemingly small distinction is crucial. By analyzing thousands of these trees, an AI learns the deep syntactic rules that govern how we build sentences, allowing it to generate its own grammatically correct and coherent text.
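Treebanks commonly store each tree as a bracketed string. The sketch below writes one plausible tree for the telescope sentence and reads it back into a nested structure. The phrase labels (S, NP, VP, PP) are conventional, but the word tags reuse this article's simplified tag names, and `parse_tree` and `leaves` are hypothetical helpers rather than any particular toolkit's API.

```python
import re

# One plausible bracketing: the PP "with the telescope" sits inside the subject NP.
bracketed = (
    "(S (NP (NP (DET The) (NOUN man)) "
    "(PP (PREP with) (NP (DET the) (NOUN telescope)))) "
    "(VP (VERB saw) (NP (DET a) (NOUN star))))"
)

def parse_tree(text: str):
    """Parse a bracketed string into nested (label, children) tuples."""
    tokens = re.findall(r"\(|\)|[^\s()]+", text)
    pos = 0

    def read_node():
        nonlocal pos
        if tokens[pos] != "(":
            raise ValueError("expected '('")
        pos += 1
        label = tokens[pos]
        pos += 1
        children = []
        while tokens[pos] != ")":
            if tokens[pos] == "(":
                children.append(read_node())
            else:
                children.append(tokens[pos])  # a bare word (leaf)
                pos += 1
        pos += 1  # consume ")"
        return (label, children)

    return read_node()

def leaves(node) -> list[str]:
    """Collect the words under a node, left to right."""
    if isinstance(node, str):
        return [node]
    _, children = node
    return [word for child in children for word in leaves(child)]

tree = parse_tree(bracketed)
subject, predicate = tree[1]
print("subject:  ", " ".join(leaves(subject)))
print("predicate:", " ".join(leaves(predicate)))
```

Because the prepositional phrase is nested inside the subject, asking for the subject's words returns the whole phrase, telescope included. That is exactly the attachment decision the annotator recorded.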

The Annotator’s Dilemma: More Art Than Science

This work is far from mechanical. Language is a product of human culture—it’s ambiguous, contextual, and wonderfully messy. Every day, linguistic annotators face complex judgment calls that no simple rulebook can solve.

Navigating Syntactic Ambiguity

Take the classic example: “I saw a man on a hill with a telescope.”

Who has the telescope? Is it you, observing the man? Is it the man, who is holding it? Or is the telescope itself sitting on the hill? An annotator must use their real-world knowledge and interpret the most likely meaning before structuring the parse tree. The decision they make becomes a lesson for the AI, teaching it about plausibility and context.
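One way to see how small the annotator's decision is on paper, and how large it is in meaning, is to write each reading as a set of word-to-head links. The sketch below uses a simplified dependency scheme invented for illustration: every link is fixed except the one for “telescope,” and “on a hill” is assumed to describe the man. The names `TOKENS`, `BASE_HEADS`, and `reading` are hypothetical.

```python
TOKENS = ["I", "saw", "a", "man", "on", "a", "hill", "with", "a", "telescope"]

# Head position for each token under a simplified scheme (an assumption).
# -1 marks the root; None is the single link the annotator must decide.
BASE_HEADS = [1, -1, 3, 1, 6, 6, 3, 9, 9, None]

def reading(telescope_head: str) -> list[tuple[str, str]]:
    """Return (word, head word) links with 'telescope' attached to the given word."""
    heads = list(BASE_HEADS)
    heads[TOKENS.index("telescope")] = TOKENS.index(telescope_head)
    return [(word, "ROOT" if h == -1 else TOKENS[h]) for word, h in zip(TOKENS, heads)]

readings = {
    "the observer uses the telescope": reading("saw"),
    "the man holds the telescope": reading("man"),
    "the telescope sits on the hill": reading("hill"),
}

for gloss, links in readings.items():
    print(f"{gloss}: telescope -> {links[-1][1]}")
```

The three readings differ in a single link, yet each one tells a different story about who has the telescope.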

Decoding Semantic and Pragmatic Nuance

The challenges go beyond grammar. How do you tag sarcasm? When a friend sees your disastrous attempt at baking and says, “Wow, you’re a real pastry chef,” the literal meaning is positive, but the intended sentiment is the exact opposite. An annotator must decide how to label this, often relying on surrounding text or shared cultural knowledge to make the call.

Idioms and cultural references present similar hurdles. A phrase like “he kicked the bucket” has nothing to do with pails. Annotators must identify it as a single idiomatic unit meaning “he died.” Without this human guidance, an AI would be lost in a sea of literal, nonsensical interpretations.

The Human Foundation of the AI Revolution

The work of these annotators forms the very bedrock upon which our AI-powered world is built. An LLM is not an “oracle” that mysteriously understands language; it is a pattern-matching engine of unimaginable scale, and the patterns it recognizes were first traced by human hands.

The quality and diversity of this human input are paramount. If the training data is annotated exclusively by speakers of one dialect or from one cultural background, the resulting AI will inherit their biases. It might struggle with other dialects, misunderstand regional idioms, or perpetuate harmful stereotypes found in the data. That’s why projects to create annotated datasets for under-resourced languages and diverse dialects are so critical for building more equitable and inclusive technology.

This process highlights a beautiful irony: to create a powerful artificial intelligence, we must first deeply investigate our own human intelligence. The disagreements between annotators—moments where two experts can’t agree on the “correct” tag—are often the most valuable data points of all. They reveal the true, inherent ambiguity of language, teaching the model that sometimes there isn’t one right answer.
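Annotation projects often put a number on this kind of disagreement. A widely used measure is Cohen's kappa, which compares how often two annotators agree with how often they would agree by chance. The article does not name a specific measure, so treat the following as one common option rather than the method any particular project uses; the labels and the two annotators' answers are made up for illustration.

```python
from collections import Counter

def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Cohen's kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of items")
    n = len(labels_a)
    # Share of items where the two annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, given how often each annotator uses each label.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[l] * counts_b[l] for l in counts_a.keys() | counts_b.keys()) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)

# Hypothetical sentiment labels from two annotators on six texts.
annotator_a = ["POS", "NEG", "NEG", "NEU", "POS", "NEG"]
annotator_b = ["POS", "NEG", "POS", "NEU", "POS", "POS"]

kappa = cohens_kappa(annotator_a, annotator_b)
disputed = [i for i, (a, b) in enumerate(zip(annotator_a, annotator_b)) if a != b]
print(f"kappa = {kappa:.2f}, disputed items: {disputed}")
```

A kappa of 1 means perfect agreement and 0 means no better than chance. The disputed items are the ones worth a second look, since they may mark text that is genuinely ambiguous rather than an annotator's mistake.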

So the next time you interact with an AI and marvel at its linguistic prowess, take a moment to appreciate the unseen world beneath the surface. Remember the global community of linguists, scholars, and language experts who spent countless hours diagramming sentences, debating the meaning of a word, and carefully labeling the nuance in a phrase. The AI revolution isn’t just about code and algorithms; it’s a testament to the depth, complexity, and richness of human language, carefully curated and passed on, one annotation at a time.
